Featured Content

-

How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Phone Numbers

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support

Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*)

Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) reach out to your Account Manager or Customer Success Manager

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

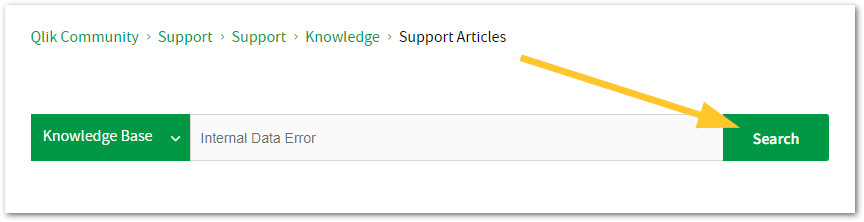

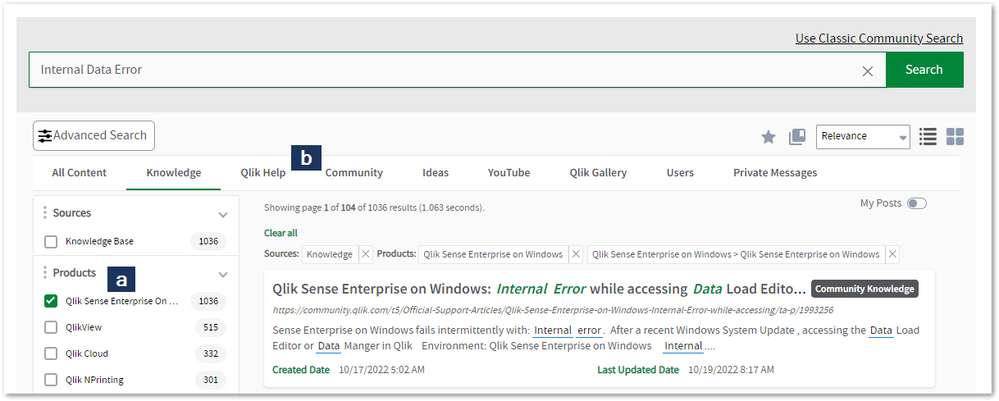

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our Youtube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar to facilitate knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to register.myqlik.qlik.com

If you already have an account, please see How To Reset The Password of a Qlik Account for help using your existing account.

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. NOTE, this link will expire after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- Create a case on your behalf, with step-by-step intake questions, for items that need further investigation

3. Qlik Support Case Portal

Log in to manage and track your active cases in the Case Portal.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not Severity 1 Error or Severity 2 Issue. For more information, visit our Qlik Support Policy.

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Phone Numbers

When other Support Channels are down for maintenance, please contact us via phone for high severity production-down concerns.

- Qlik Data Analytics: 1-877-754-5843

- Qlik Data Integration: 1-781-730-4060

- Talend AMER Region: 1-800-810-3065

- Talend UK Region: 44-800-098-8473

- Talend APAC Region: 65-800-492-2269

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents

-

Qlik Talend Job Conductor Fails to Clean Generated Jobs and ExecutionLogs

In Job Conductor, the Job log file cleaner no longer runs after installing Talend V8 R2025-01: executionLogs and generatedJobs are not cleaned as expected, so files pile up and fill the filesystem.

Resolution

Apply the TAC R2025-04 patch or later to solve this issue.

Cause

This is a known Jira issue: the file cleaner doesn't work as expected due to the regexp matcher to escape file

Internal Investigation ID(s)

Jira Issue: QTAC-966

Environment

-

Qlik Talend Administration Center LDAP Login Failure MSG_04177_CONNECTION_TIMEOU...

Talend Administration Center fails LDAP logins with the following error in the log:

ERROR LoginHandler - LDAP user authenticate fail:

org.apache.directory.ldap.client.api.exception.LdapConnectionTimeOutException: MSG_04177_CONNECTION_TIMEOUT (2000)

Resolution

The LDAP connection timeout defaults to 2000 ms. Follow the steps below to increase the ldap.config.timeout value in the configuration table of the Talend Administration Center database.

- Tune the default 2000 ms timeout to 10000 ms

UPDATE `tacdb`.`configuration` SET `value` = '10000' WHERE (`key` = 'ldap.config.timeout');

Unit: "ms"

- Restart Talend Administration Center to take effect

Cause

Potential Causes

- Network unreachable / firewall: The LDAP server is not reachable from the client due to network issues or firewall rules blocking port 389 (LDAP) or 636 (LDAPS).

- Server: The LDAP server is not running or is overloaded; it is down, unresponsive, or too busy to respond within 2 seconds.

- Timeout setting: The client timeout value is too low; the 2000 ms (2 seconds) timeout is insufficient for the current network latency.
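To tell the first two causes apart from the third, it can help to measure how long a plain TCP connection to the LDAP port takes from the Talend Administration Center host. The following is a minimal sketch, not part of the product: `probe_tcp` is a hypothetical helper, and it only checks that the port accepts a connection within the given time. It does not perform an LDAP bind.

```python
import socket
import time


def probe_tcp(host, port, timeout_s=2.0):
    """Try to open a TCP connection; return (reachable, elapsed_ms)."""
    start = time.monotonic()
    try:
        # create_connection raises OSError (including timeouts) on failure
        with socket.create_connection((host, port), timeout=timeout_s):
            reachable = True
    except OSError:
        reachable = False
    elapsed_ms = (time.monotonic() - start) * 1000.0
    return reachable, elapsed_ms
```

For example, `probe_tcp("ldap.example.com", 389, timeout_s=2.0)` (the host name is a placeholder) mirrors the default 2000 ms limit. A connection that succeeds but takes close to two seconds suggests that raising the timeout is the right fix, while an immediate failure points to the network or the server.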

Environment


-

Security Rule Example: How to show data model viewer for published apps

How to grant users access to the Data Model Viewer.

With default security rules and settings, users cannot see the data model for published apps. However, we can achieve this by creating or updating security rules through the Qlik Sense Management Console.

Resolution

Option 1: Create a new Security Rule

- Go to the Qlik Sense Management Console

- Open Security Rules

- Click Create new

- Create the following rule:

- Name = DataModelViewers

- Description = A description of your choice.

- Resource filter = App_*

- Actions = "Read","Update"

- Conditions = Users of your choice or a previously defined role you will tag the users with.

- Context = Both in hub and QMC or Only in hub

- Click Apply

Option 2: Tagging users as ContentAdmins

This requires a rework of the ContentAdmin rule and will provide far more permissions to users than Option 1. See ContentAdmin for details on what a ContentAdmin is allowed to do.

- Tag the users you want to be able to see data models with the ContentAdmin role.

- Go to the Security Rules

- Locate the default ContentAdmin rule and open it

- Modify the rule by changing QMC only to Both in hub and QMC

- Click Apply

-

Qlik Sense and Cloud Distribution Error: You do not have the privilege to read c...

To distribute Qlik Sense applications from on-premise to SaaS, you are trying to create a new deployment through the QMC section "Cloud Distribution".

When clicking on the Deployment setup, you are intermittently receiving an error:

You do not have the privilege to read content

When checking the Hybrid Deployment Service log located in %ProgramData%\Qlik\Sense\Log\HybridDeploymentService\Trace, you see errors when HDS tries to fetch a license from the repository.

397 20220217T094322.646+01:00 INFO qlikserver1 37 domain\SVC_QS "Request starting HTTP/1.1 GET https://localhost:5927/v1/license " 36644

400 20220217T094322.653+01:00 DEBUG qlikserver1 37 domain\SVC_QS Refreshing the license cache 36644

401 20220217T094322.654+01:00 DEBUG qlikserver1 37 domain\SVC_QS Getting a license from repository 36644

402 20220217T094322.670+01:00 WARN qlikserver1 33 domain\SVC_QS Could not get a license, statusCode = "Forbidden", reason = '"Forbidden"' 36644

403 20220217T094322.672+01:00 ERROR qlikserver1 33 domain\SVC_QS Error when getting a license: "Response status code does not indicate success: 403 (Forbidden)." 36644

Environment

Qlik Sense Enterprise on Windows

Resolution

Log in to the server with the account running Qlik Sense services.

Open Internet Explorer and check the proxy configuration in settings > Internet options > connections tab > LAN settings.

Ensure the configuration is set correctly, according to your internal IT requirements.
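If Python happens to be available on the server, the proxy settings seen by the service account can also be listed from a script. This is a rough sketch under assumptions: `describe_proxies` is a hypothetical helper, it must be run as the account running the Qlik Sense services, and it relies on the standard library's `urllib.request.getproxies()`, which to my understanding checks proxy environment variables first and then falls back to the per-user Windows proxy settings. It may not reflect automatic configuration scripts.

```python
import urllib.request


def describe_proxies(proxies=None):
    """Return readable lines for the proxy settings visible to this user."""
    if proxies is None:
        # Reads the proxy configuration of the account running the script
        proxies = urllib.request.getproxies()
    if not proxies:
        return ["no proxy configured for this user"]
    return [f"{scheme}: {url}" for scheme, url in sorted(proxies.items())]


for line in describe_proxies():
    print(line)
```

The output is only a starting point for comparison with what your internal IT requirements specify; the Internet Options dialog remains the place to change the setting.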

Cause

Wrong proxy configuration set in the service account Windows profile.

Internal Investigation ID(s)

QB-9173

-

Cleaning the Qlik Alerting database

This article documents how to delete the data from your Qlik Alerting database. The attached script is set to retain data history for up to a hundred (100) scans. You can modify the script to keep more. We do not recommend reducing the number to below a hundred as that would negatively impact alert conditions and history.

Always back up your Qlik Alerting database before running the script. See How to take Backup and Restore Qlik Alerting MongoDB Database for details.

To configure and execute the script:

- Verify that NodeJS is installed on your machine

- Save the attached scriptRunner.js script in an easily accessible location, then open it

- Change the configuration of the script to include your dbURI and dbName:

'recordsToKeep':100, // number of alert scan values to keep in the datahistory table

dbURI: 'mongodb://127.0.0.1:27017', // database URL of the Qlik Alerting database

dbName:'qlikalerting' // update if you have changed the default database name for Qlik Alerting

}

- Open a Windows Command prompt with elevated (administrator) permissions

- Switch to the location of your scriptRunner.js file and run it using the command:

node scriptRunner.js

The command creates a folder named alerting-databse-cleaner, creates app.js, installs the required dependencies (mongodb NodeJS drivers), and then runs the app.js file.

- The result should be:

The first message logs the creation of the directory. We then log the installation of the npm package and list the number of records we want to keep in the database. This is followed by the MongoDB connection message and, lastly, shows if there is data that matches the previously set condition.

If there are fewer records than the recordsToKeep, nothing will be deleted, and the message No data available for deleting with given values is shown.
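The retention behaviour described above can be pictured with a small sketch. This is not the attached script, whose internals are not shown here: `select_scans_to_delete` is a hypothetical function that works on an in-memory list rather than MongoDB, and it assumes the scans are ordered from oldest to newest.

```python
def select_scans_to_delete(scan_ids, records_to_keep=100):
    """Return the oldest scans that fall outside the newest records_to_keep."""
    if records_to_keep < 0:
        raise ValueError("records_to_keep must not be negative")
    surplus = len(scan_ids) - records_to_keep
    if surplus <= 0:
        # Not more records than we want to keep: nothing is deleted
        return []
    return list(scan_ids[:surplus])


print(len(select_scans_to_delete(list(range(150)))))  # 50
print(select_scans_to_delete(list(range(80))))        # []
```

With 150 scans and the default of 100, only the 50 oldest are selected; with 80 scans the result is empty, which corresponds to the "No data available for deleting with given values" case.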

Not keeping the minimum of a hundred (100) scans for an alert will impact the Previous Scanned Conditions set during Alert Creation. (Fig 3)

Fig 3

Alert History Example

Environment

- Qlik Alerting


-

Security Rule Requirements for Promoting Community Sheets

In this scenario, the administrator would like to give a user or set of users the permissions needed to promote community sheets to base sheets.

Note: The ability to promote a Community sheet to a Base sheet also comes with the ability to demote a Base sheet to a Community sheet.

Environment:

- Qlik Sense Enterprise June 2018 or higher to leverage the new functionality present in those builds

Resolution:

- From a security rule perspective, the user or set of users needs the Approve action allowed for the relevant app objects.

- For an example way to accomplish this:

- Resource Filter: App.Object_*

- Actions: Approve

- Conditions: ((user.name="Kyle Cadice" and resource.app.stream.name="Everyone"))

- Context: Hub

This rule provides a single named user the right to promote Community sheets to become base sheets for apps in the Everyone stream. Further customization can restrict this right to specific apps (e.g. ((user.name="Kyle Cadice" and resource.app.id="d0c33707-0836-4ab4-b15f-46bb2e02eda4"))), provide the right to users of a particular group (e.g. ((user.group="QlikSenseDevelopers" and resource.app.stream.name="Everyone"))), or provide the right to a named user for all apps (e.g. ((user.name="Kyle Cadice"))).

We in Qlik Support have virtually no scope when it comes to debugging or writing custom rules for customers. That level of implementation advice needs to be handled by the folks in Professional Services or Presales. That being said, this example is provided for demonstration purposes to explain specific scenarios. No Support or maintenance is implied or provided. Further customization is expected to be necessary and it is the responsibility of the end administrator to test and implement an appropriate rule for their specific use case.

-

Qlik Sense: Column empty when loading data from JSON file

A JSON file is loaded into Qlik Sense by selecting data through the Select Data dialog. All data is visible, as expected:

After loading data into the app, columns from the JSON file are now empty (null) in both the data model and data preview:

Resolution

There are multiple ways to resolve this issue:

- Avoid using dots in JSON attribute names.

- Load JSON data with a wildcard. During wildcard load, the Analytics Engine will parse the JSON file and name the output columns, similar to the parsing done during the data select dialog preview. This approach loads all the columns from the JSON, so additional processing like a preceding load may be needed to limit the stored data to the desired result.

Example:

LOAD resource.attributes.telemetry.sdk.language;

LOAD *

FROM [lib://DataFiles/example.json] (json);

Cause

The Analytics Engine parses the JSON file during the data selection process and correctly finds all the attributes in the JSON file. The columns are named based on the attribute value and path in the JSON file.

The JSON file in this case has value names containing the dot character.

{

"resource": {

"attributes": {

"telemetry.sdk.language": "python"

}

}

}

The Analytics Engine has no problem parsing this valid JSON structure. The generated script has explicit field names, including the value name in the JSON file.

LOAD

resource.attributes.telemetry.sdk.language

FROM [lib://DataFiles/example.json] (json);

During load, the Analytics Engine interprets the explicit field name as a path to a language child within an sdk object, as this is what the dot separator indicates.

As this path does not exist in the JSON file, the parser correctly returns NULL for the lookup. The path would only resolve against a structure like this:

"resource": {

"attributes": {

"telemetry" : {

"sdk": {

"language": "python"

}

}

}

}

Environment

- Qlik Cloud Analytics

- Qlik Sense Enterprise on Windows
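The cause described in this article can be reproduced outside Qlik with a few lines of Python. This is only an analogy for how a dot-separated path lookup behaves, not the Analytics Engine's actual parser; `lookup_by_dotted_path` is a hypothetical helper.

```python
import json

doc = json.loads(
    '{"resource": {"attributes": {"telemetry.sdk.language": "python"}}}'
)


def lookup_by_dotted_path(data, path):
    """Split the path on dots and descend one key per segment."""
    node = data
    for key in path.split("."):
        if not isinstance(node, dict) or key not in node:
            return None  # the path does not exist, comparable to NULL
        node = node[key]
    return node


path_result = lookup_by_dotted_path(
    doc, "resource.attributes.telemetry.sdk.language"
)
literal_result = doc["resource"]["attributes"]["telemetry.sdk.language"]

print(path_result)     # None
print(literal_result)  # python
```

The dotted lookup searches for a "telemetry" object that does not exist, while the attribute is actually a single key whose name contains dots. This is why avoiding dots in attribute names, or letting a wildcard load name the columns, sidesteps the problem.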

-

Qlik Replicate: Microsoft Fabric warehouse update conflicts and upload failures

The Microsoft Fabric Target endpoint may receive one of the following errors during transactions and while validating data:

[TARGET_APPLY ]E: RetCode: SQL_ERROR SqlState: 42000 NativeError: 24556 Message: [Microsoft][ODBC Driver 18 for SQL Server][SQL Server]Snapshot isolation transaction aborted due to update conflict. Using snapshot isolation to access table 'F4801' directly or indirectly in database 'JDE_REP' can cause update conflicts if rows in that table have been deleted or updated by another concurrent transaction. Retry the transaction. [1022502] (ar_odbc_conn.c:844)

[TARGET_APPLY ]E: RetCode: SQL_ERROR SqlState: 42000 NativeError: 16507 Message: [Microsoft][ODBC Driver 18 for SQL Server][SQL Server]String or binary data would be truncated while reading column of type 'VARCHAR'. Check ANSI_WARNINGS option. Underlying data description: file 'https://storage.dfs.core.windows.net/qlik-prod/staging/Folder/0/CDC00000DC3.csv', column 'col2'. Truncated value: '"xxxxxxx'. Line: 1 Column: -1

[TARGET_APPLY ]E: RetCode: SQL_ERROR SqlState: 22018 NativeError: 245 Message: [Microsoft][ODBC Driver 18 for SQL Server][SQL Server]Conversion failed when converting the varchar value 'U' to data type int. Line: 1 Column: -1 [1022502] (ar_odbc_stmt.c:5090)

Resolution

Upgrade Qlik Replicate.

This has been resolved with patch 2024.11 SP02 and the new 2025.5 release, as well as any subsequent releases.

Cause

Fabric staging files are not removed correctly, resulting in malformed queries leading to these update statements producing the error. Malformed queries are also sending the wrong files, causing Microsoft Fabric to be unable to upload data to the target destination.

Internal Investigation ID(s)

SUPPORT-3557, SUPPORT-3654, SUPPORT-3670

Environment

- Qlik Replicate

-

What version of Qlik Sense Enterprise on Windows am I running?

Where can I find the installed version or service release of my Qlik Sense Enterprise on a Windows server?

You can locate the Qlik Sense Enterprise on Windows version in four locations:

- The Qlik Sense Hub

- The Qlik Sense Enterprise Management Console

- The Windows Installed Programs menu

- The Engine.exe on disk

The Qlik Sense Hub

This option is only available for versions of Qlik Sense before Qlik Sense May 2025 Patch 3.

- Open the Qlik Sense Hub

- Locate and click the Profile on the landing screen (corner on the right)

- Click the About link

- The hub will display the installed version

The Qlik Sense Management Console

- Open the Qlik Sense Management Console.

- On the start screen, look in the bottom right corner for the version number.

The Windows Installed Programs menu

- Open the Windows Uninstall or change a program menu

- Expand the window until the version is visible

The Engine.exe

- Open a Windows File explorer

- Navigate to C:\Program Files\Qlik\Sense\Engine

- Right-click the Engine.exe

- Click Properties

- Switch to the Details tab

- The File version will be listed

Qlik Cloud Analytics does not list versions as the cloud version is always identical and current across the platform.

-

Edits to Analytics Repository Settings in Qlik Enterprise Manager are not saved

Setting up or configuring the Analytics Repository in Qlik Enterprise Manager fails. Accessing the Analytics tab shows the message:

Repository Connection Settings – Before you can use Analytics, you need to configure the connection settings for the PostgreSQL repository. Open Repository Connection Settings.

The message continues to trigger in the Analytics tab, even after correctly configuring the connection. The Start Collection button in the Enterprise Manager Settings remains disabled.

Resolution

Recreate the JavaServerConfiguration entry in cfgrepo.SQLite.

This solution requires knowledge of a DB browser for SQLite and changes to a configuration file. Always back up your data before proceeding.

If you do not yet have access to a DB Browser for SQLite, one can be downloaded from https://sqlitebrowser.org/

- Stop the Qlik Enterprise Manager service

- With the SQLite Browser, open the file ...\Attunity\Enterprise Manager\data\cfgrepo.SQLite

- Open the Browse Data tab

- Select Objects in the Table drop-down

- Locate and delete the row named JavaServerConfiguration (Select the row and use the DEL key)

- Click Write Changes to save

- In Windows File Explorer, go to ...\Attunity\Enterprise Manager\data

- Rename the Java folder to Java_old

- Start the Qlik Enterprise Manager Service; a new JavaServerConfiguration entry and a new Java folder are created
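Because the procedure edits cfgrepo.SQLite directly, it is worth copying the file before opening it in the SQLite browser. The sketch below is one way to do that, assuming Python is available on the server and the Qlik Enterprise Manager service is already stopped; `backup_file` is a hypothetical helper, and the path you pass in is your own installation's path to the file.

```python
import shutil
import time
from pathlib import Path


def backup_file(path, stamp=None):
    """Copy path to a sibling '<name>.<stamp>.bak' file and return its path."""
    src = Path(path)
    stamp = stamp or time.strftime("%Y%m%d-%H%M%S")
    dst = src.with_name(f"{src.name}.{stamp}.bak")
    shutil.copy2(src, dst)  # copies content and file metadata
    return dst
```

Calling `backup_file(...)` with the full path to cfgrepo.SQLite leaves a timestamped copy next to the original, which can be copied back if the repository needs to be restored.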

Cause

Incorrect configuration in the Java settings inside the SQLite file.

Environment

- Qlik Replicate

-

Qlik Talend Cloud: Retrieving APIs from deleted users in API Designer

Older API user accounts that are no longer accessible, because team members have departed or their email addresses have become inactive, are unavailable. Consequently, you are unable to manage or update their APIs: the original owners are absent, and you lack the authority to edit and publish new versions.

For all newly defined APIs, you can directly assign ownership to the "Architects" group. However, you are unable to alter the ownership of existing APIs on your own.

Resolution

Talend Cloud API Designer allows you to share APIs with other users and/or groups of users. For additional details, please refer to the sharing api documentation.

Note: In the Talend Cloud API Designer settings, it is possible to reassign API contracts previously created by deleted users to active connected users. This option is only available for users that have the Administrate API Designer permission enabled.

Environment

- Talend Cloud API Designer & Tester

-

ORA-12899: value too large for column

When replicating to an Oracle target, in some cases you may get an ORA-12899 error.

This error may happen when your Oracle target has NLS_LENGTH_SEMANTICS set to 'byte' and the column being replicated from the source endpoint includes one or more Unicode characters that are represented by more than one byte. For example, when you replicate a varchar (10), it is created as varchar (10 byte). If the source data includes a Unicode character that takes more than one byte in UTF-8, the value may be only 10 characters long in the source yet require more than 10 bytes on the target endpoint (where Replicate works in UTF-8). This results in the ORA-12899 error.

In general, when working with Oracle, the NLS_LENGTH_SEMANTICS setting determines how the CHAR and VARCHAR2 columns will be created; that is, it enables you to create CHAR and VARCHAR2 columns using either byte or character length semantics.
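The difference between the two length semantics is easy to see by comparing a string's character count with its UTF-8 byte count. The sketch below illustrates the arithmetic only; the function names are hypothetical, and Python's `len` counts Unicode code points, which is a close stand-in for character semantics rather than Oracle's exact rule.

```python
def fits_byte_semantics(value, declared_length):
    """True if the UTF-8 encoded value fits a column sized in bytes."""
    return len(value.encode("utf-8")) <= declared_length


def fits_char_semantics(value, declared_length):
    """True if the value fits a column sized in characters."""
    return len(value) <= declared_length


sample = "Zürich 123"  # 10 characters, but "ü" takes 2 bytes in UTF-8

print(len(sample))                      # 10
print(len(sample.encode("utf-8")))      # 11
print(fits_byte_semantics(sample, 10))  # False
print(fits_char_semantics(sample, 10))  # True
```

A 10-character value that needs 11 bytes overflows a column sized as 10 bytes but fits one sized as 10 characters.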

Environment

- Replicate with Oracle target endpoint

Resolution

- Stop the task

- Open the Oracle target endpoint and go to the Advanced tab > Internal Parameters.

In the search box, type charLengthSemantics and set it to CHAR. This causes Replicate to create CHAR and VARCHAR2 columns using character semantics, for example COL1 VARCHAR2(10 CHAR) instead of COL1 VARCHAR2(10 BYTE). The column is then long enough to hold its value (including Unicode characters), which eliminates the ORA-12899 error.

Note:

- The Replicate internal parameter charLengthSemantics determines whether to use the Oracle default of NLS_LENGTH_SEMANTICS or override it.

- The NLS_LENGTH_SEMANTICS/charLengthSemantics setting affects any CHAR and VARCHAR2 columns in any table that Replicate creates in the target endpoint

-

How to enable Qlik Sense QIX performance logging and use the Telemetry Dashboard

With February 2018 of Qlik Sense, it is possible to capture granular usage metrics from the Qlik in-memory engine based on configurable thresholds. This provides the ability to capture CPU and RAM utilization of individual chart objects, CPU and RAM utilization of reload tasks, and more.

Also see Telemetry logging for Qlik Sense Administrators in Qlik's Help site.

Click here for Video Transcript

Enable Telemetry Logging

In the Qlik Sense Management Console, navigate to Engines > choose an engine > Logging > QIX Performance log level. Choose a value:

- Off: No logging will occur

- Error: Activity meeting the ‘error’ threshold will be logged

- Warning: Activity meeting the ‘error’ and ‘warning’ thresholds will be logged

- Info: All activity will be logged

Note that log levels Fatal and Debug are not applicable in this scenario.

Also note that the Info log level should be used only during troubleshooting as it can produce very large log files. It is recommended during normal operations to use the Error or Warning settings.

- Repeat for each engine for which telemetry should be enabled.

Set Threshold Parameters

- Edit C:\ProgramData\Qlik\Sense\Engine\Settings.ini If the file does not exist, create it. You may need to open the file as an administrator to make changes.

- Set the values below. It is recommended to start with these threshold values and increase or decrease them as you become more aware of how your particular environment performs. Values that are too low will create very large log files.

[Settings 7]

ErrorPeakMemory=2147483648

WarningPeakMemory=1073741824

ErrorProcessTimeMs=60000

WarningProcessTimeMs=30000

- Save and close the file.

- Restart the Qlik Sense Engine Service Windows service.

- Repeat for each engine for which telemetry should be enabled.

Parameter Descriptions

- ErrorPeakMemory: Default 2147483648 bytes (2 GB). If an engine operation requires more than this value of Peak Memory, a record is logged with log level 'error'. Peak Memory is the maximum, transient amount of RAM an operation uses.

- WarningPeakMemory: Default 1073741824 bytes (1 GB). If an engine operation requires more than this value of Peak Memory, a record is logged with log level 'warning'. Peak Memory is the maximum, transient amount of RAM an operation uses.

- ErrorProcessTimeMs: Default 60000 milliseconds (60 seconds). If an engine operation requires more than this value of process time, a record is logged with log level 'error'. Process Time is the end-to-end clock time of a request.

- WarningProcessTimeMs: Default 30000 milliseconds (30 seconds). If an engine operation requires more than this value of process time, a record is logged with log level 'warning'. Process Time is the end-to-end clock time of a request.

Note that it is possible to track only process time or peak memory. It is not required to track both metrics. However, if you set ErrorPeakMemory, you must set WarningPeakMemory. If you set ErrorProcessTimeMs, you must set WarningProcessTimeMs.
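The pairing rule above, and the byte values used for the memory thresholds, can be checked with a small helper before editing Settings.ini by hand. This is a sketch under assumptions, not a Qlik tool: `build_settings` is a hypothetical function that only renders the `[Settings 7]` section as text and does not touch any other content a real Settings.ini may contain.

```python
import configparser
import io

GIB = 1024 ** 3  # bytes in one gibibyte; 2 * GIB == 2147483648

REQUIRED_PAIRS = [
    ("ErrorPeakMemory", "WarningPeakMemory"),
    ("ErrorProcessTimeMs", "WarningProcessTimeMs"),
]


def build_settings(thresholds):
    """Render the thresholds as a '[Settings 7]' section."""
    for error_key, warning_key in REQUIRED_PAIRS:
        if error_key in thresholds and warning_key not in thresholds:
            raise ValueError(f"{error_key} is set but {warning_key} is missing")
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the mixed-case key names as written
    parser["Settings 7"] = {key: str(val) for key, val in thresholds.items()}
    buffer = io.StringIO()
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


print(build_settings({
    "ErrorPeakMemory": 2 * GIB,
    "WarningPeakMemory": 1 * GIB,
    "ErrorProcessTimeMs": 60000,
    "WarningProcessTimeMs": 30000,
}))
```

Tracking only one metric, for example just the two process time keys, passes the check, while setting an error threshold without its warning counterpart raises an error before anything is written.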

Reading the logs

- To familiarize yourself with logging basics, please see help.qlik.com

Note: Currently telemetry is only written to log files. It does not yet leverage the centralized logging to database capabilities.

- Telemetry data is logged to C:\ProgramData\Qlik\Sense\Log\Engine\Trace\<hostname>_QixPerformance_Engine.txt and rolls to the ArchiveLog folder in your ServiceCluster share.

- In addition to the common fields (described on help.qlik.com), fields relevant to telemetry are:

- Level: The logging level threshold the engine operation met.

- ActiveUserId: The User ID of the user performing the operation.

- Method: The engine operation itself. See Important Engine Operations below for more.

- DocId: The ID of the Qlik application.

- ObjectId: For chart objects, the Object ID of the chart object.

- PeakRAM: The maximum RAM an engine operation used.

- NetRAM: The net RAM an engine operation used. For hypercubes that support a chart object, the Net RAM is often lower than Peak RAM as temporary RAM can be used to perform set analysis, intermediate aggregations, and other calculations.

- ProcessTime: The end-to-end clock time for a request including internal engine operations to return the result.

- WorkTime: Effectively the same as ProcessTime, but excluding the internal engine operations needed to return the result. It reports a very slightly shorter time than ProcessTime.

- TraverseTime: Time spent running the inference engine (i.e., the green, white, and grey states).

Note: for more info on the common fields found in the logs please see help.qlik.com.
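To illustrate how these fields can be used, here is a minimal sketch that ranks logged operations by ProcessTime. It assumes the log is tab-separated with a header row whose column names match the fields listed above; the sample rows and the function name `slowest_operations` are made up for illustration and do not come from a real log.

```python
import csv
import io

# Made-up sample rows; a real file would be read from the Trace folder instead.
SAMPLE_LOG = (
    "Level\tActiveUserId\tMethod\tDocId\tObjectId\tPeakRAM\tNetRAM\tProcessTime\tWorkTime\tTraverseTime\n"
    "WARN\tuser1\tGenericObject::GetLayout\tapp-1\tchart-1\t1200000000\t400000000\t31000\t30990\t120\n"
    "ERROR\tuser2\tDoc::DoReload\tapp-2\t\t2500000000\t900000000\t75000\t74900\t0\n"
)


def slowest_operations(log_text, top=5):
    """Return (Method, DocId, ProcessTime) tuples, slowest first."""
    reader = csv.DictReader(io.StringIO(log_text), delimiter="\t")
    rows = sorted(reader, key=lambda row: float(row["ProcessTime"]), reverse=True)
    return [(row["Method"], row["DocId"], float(row["ProcessTime"])) for row in rows[:top]]


for method, doc_id, process_time in slowest_operations(SAMPLE_LOG):
    print(f"{method} on {doc_id}: {process_time:.0f} ms")
```

Sorting on PeakRAM instead of ProcessTime gives the equivalent view for memory-heavy operations.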

Important Engine Operations

The Method column records the engine operation. The operations are too numerous to describe in full. The most relevant methods to investigate are listed below; they are also the ones that appear most often when a Warning or Error log entry is written.

| Method | Description |
| --- | --- |
| Global::OpenApp | Opening an application |
| Doc::DoReload, Doc::DoReloadEx | Reloading an application |
| Doc::DoSave | Saving an application |
| GenericObject::GetLayout | Calculating a hypercube (i.e., chart object) |

Comments

- For the best overall representation of the time it takes for an operation to complete, use ProcessTime.

- About ERROR and WARNING log level designations: These designations were used because they conveniently fit into the existing logging and QMC frameworks. A row of telemetry information written out as an error or warning does not mean the engine had a warning or error condition that requires investigation or remedy, unless you are interested in optimizing performance. It is simply a means of reporting on the thresholds set in the engine settings.ini file, and it allows relevant information to be logged without generating overly verbose log files.

Qlik Telemetry Dashboard:

Once the logs mentioned above are created, the Telemetry Dashboard for Qlik Sense can be downloaded and installed to read the log files and analyze the information.

The Telemetry Dashboard provides the ability to capture CPU and RAM utilization of individual chart objects, CPU and RAM utilization of reload tasks, and more. For additional information, including installation and demo videos, see Telemetry Dashboard - Admin Playbook.

The dashboard installer can be downloaded at: https://github.com/eapowertools/qs-telemetry-dashboard/wiki. NOTE: The dashboard itself is not supported by Qlik Support directly; see the Frequently Asked Questions about the dashboard. To report issues with the dashboard, use the "issues" webpage.

1. Right-click the installer file and use "Run as Administrator". The files will be installed at C:\Program Files\Qlik\Sense

2. Once installed, you will see 2 new tasks, 2 data connections and 1 new app in the QMC.

3. In the QMC, change the ownership of the application to yourself, or to the user you want to open the app with.

4. Click on the 'Tasks' section in the QMC, click once on 'TelemetryDashboard-1-Generate-Metadata', then click 'Start' at the bottom. This task will run, and automatically reload the app upon completion.

5. Use the application from the hub to browse the information by sheets.

Replicate - How to store before-image data in target table

Sometimes we need to store a column's before-image data in the target table. This is useful if we want to store both the before-image and the after-image of the column values in the target table for use by downstream apps.

Environment

- Qlik Replicate All supported versions

Detailed Steps:

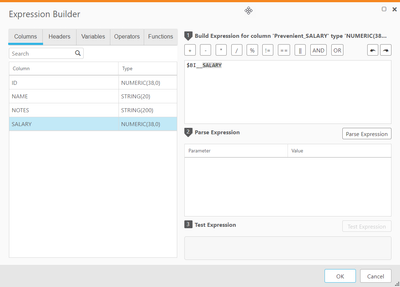

In Apply Changes mode (with Store Changes mode turned off), add a new column in the table settings transformation (named "prevenient_salary" in this sample). The variable expression has the form $BI__<columnName>, where $BI__ is a mandatory prefix (which instructs Replicate to capture the before-image data) and <columnName> is the original table column. For example, if the original table column name is SALARY, then $BI__SALARY is the column's before-image data:

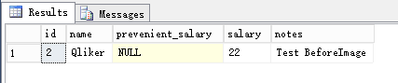

If the SALARY column value is updated from 22 to 33 on the source side, then before the UPDATE the target table row looks like:

After the UPDATE is applied to the target table, the row looks like:

In this sample the before-image value is 22, the after-image value is 33.

Related Content

Before-image data can also be used in filters; see the sample here.

-

Exporting and importing Security rules using Qlik-Cli

Scenario:

- Have Qlik-Cli installed and configured (see How to install Qlik-CLI for Qlik Sense on Windows for more insight on how to install and configure Qlik-Cli)

- Create a PowerShell script using the following code as a base:

! Note that the code is provided as is and will need to be modified to suit specific needs. For assistance, please contact the original creators of Qlik-Cli.

Connect-Qlik -computername "QlikSenseServer.company.com"
# This will export the custom security rules and output them as secrules.json
Get-QlikRule -filter "type eq 'Custom' and category eq 'security'" -full -raw | ConvertTo-Json | Out-File secrules.json
# This will import secrules.json into a Sense site
# The ideal use case would be to move the developed security rules from one environment to another, so connecting to another Sense site would likely be needed here
Get-Content -raw .\secrules.json |% {$_ -replace '.*"id":.*','' } | ConvertFrom-Json | Import-QlikObject

Environment:

Qlik Sense Enterprise on Windows, all versions

-

Shared Automations: How to identify the user running the Automation

With the release of shared automations in Qlik Automate, it is now possible to run an automation in a Shared Space for users other than the owner. The automation will run using the owner’s Qlik account and any third-party connections as they were configured by the owner.

When an automation is run by another user, it is possible to retrieve this user’s information during the automation run. This article outlines how this is done.

This is only supported when the automation is run from the console or API. It is not supported for triggered automations when they are run from the trigger URL or webhook automations when they are run from the webhook event. The reason for this is that in these cases, no user subject is sent to the automation (triggered executions use the execution token instead of a user token, and webhook automations have no user involved when they are run by the webhook event).

However, if triggered or webhook automations are run manually or over API, it will be possible to retrieve the user's info.

Identifying the user who started the Automation

When an automation is run by any user, it is possible to retrieve the user id and user info for the user who executed the automation during the automation run.

- Use the Get Automation Run block (A) from the Qlik Cloud Services connector and configure it with the Automation ID and Automation Run ID (C) formulas from the formula picker (B).

- The Get Automation Run block will return the executedById to identify who has started the automation:

- Configure the Get User block to use the executedById parameter as input for the User Id parameter:

- You can then use a Condition block to evaluate the returned value from the Get User block.

For example, you can check if the user is a part of a predefined list of users:

Environment

- Qlik Cloud Automate

- Qlik Cloud

-

Pattern Frequency Statistics for DB2 in Qlik Talend Data Profiling

Unable to use data profiling to achieve Pattern Frequency Statistic on DB2.

Resolution

As of R2025-06, the Pattern Frequency Statistic feature in Qlik Talend Studio > Data Profiling is not supported for DB2 databases.

Workaround

Export the data in CSV format, define a file-delimited metadata type based on the CSV file, and then perform the profiling on that data.

For more information, see Pattern frequency statistics.

An active Idea has been logged to support this feature for DB2. To vote on the idea, log in to Ideation before going to #495364 Talend Studio Data Profiling (DQ) support db2 Pattern Frequency Statistics indicators.

Environment

- Qlik Talend Studio

-

Qlik Talend Snowflake Job is failing with error "Session no longer exists. New ...

A Talend Snowflake Job is failing at the t<DB>Connection component when acquiring the connection to execute SQL.

The error looks like the following:

"Session no longer exists. New login required to access the service."

Resolution

Compare the timeout settings in Talend Studio and in Snowflake:

- In Studio: navigate to the Snowflake connection component > Advanced Settings > Login Timeout

- In Snowflake: SHOW PARAMETERS LIKE '%timeout%';

Cause

The Login Timeout in Snowflake or in the Talend Studio Snowflake component may have been set too low for what was required.

Environment

-

Qlik Talend Administration Center: How to reset default credentials (security@co...

If you are unable to access TAC using the default credentials (security@company.com/admin), you may have forgotten your password.

You can reset it by following these steps:

- Establish a connection to the TAC database.

- In the TAC database, execute the following statement:

UPDATE `user` SET `password`=0x21232F297A57A5A743894A0E4A801FC3 WHERE id=<userID>;

Note that `0x21...` corresponds to the encrypted password for "admin". To identify the <userID> associated with the user whose password you wish to update, execute this query:

SELECT id, login FROM `user`;

- Using the new password, log in to Talend Administration Center once again.
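The hex literal used in the UPDATE statement matches the MD5 digest of the string "admin", which can be confirmed with a short sketch. The helper name `md5_hex_literal` is illustrative; whether every TAC version stores passwords in this form is an assumption, so treat this as a way to verify the value above rather than a general reset tool.

```python
import hashlib


def md5_hex_literal(password):
    """Return the MD5 digest of the password as an uppercase 0x-prefixed hex literal."""
    digest = hashlib.md5(password.encode("utf-8")).hexdigest().upper()
    return "0x" + digest


print(md5_hex_literal("admin"))  # prints 0x21232F297A57A5A743894A0E4A801FC3
```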

Related Content

Change the default password used to configure the TAC database

Environment

-

Qlik Talend Remote Engine Fails to Start via Systemd – Exit Status 126

The Talend Remote Engine Service fails to start, and systemd commands do not work as expected, resulting in the following error messages in the system logs:

Subject: Unit process exited

Defined-By: systemd

Support: https://access.redhat.com/support

An ExecStart= process belonging to unit talend-remote-engine.service has exited.

The process' exit code is 'exited' and its exit status is 126.

Jun 19 14:10:13 cmips-etl-ate-talend systemd[1]: talend-remote-engine.service: Failed with result 'exit-code'.

Subject: Unit failed

Defined-By: systemd

Support: https://access.redhat.com/support

The unit talend-remote-engine.service has entered the 'failed' state with result 'exit-code'.

Jun 19 14:10:13 cmips-etl-ate-talend systemd[1]: Failed to start karaf.

Subject: A start job for unit talend-remote-engine.service has failed

Defined-By: systemd

Support: https://access.redhat.com/support

A start job for unit talend-remote-engine.service has finished with a failure.

The job identifier is [Job Number] and the job result is failed.

Jun 19 14:17:50 cmips-etl-ate-talend systemd[1]: Starting karaf...

Subject: A start job for unit talend-remote-engine.service has begun execution

Defined-By: systemd

Support: https://access.redhat.com/support

A start job for unit talend-remote-engine.service has begun execution.

Resolution

Solution for A

- Test Manual Startup

To isolate whether the issue is with the service wrapper or the engine itself, try starting the engine manually:

cd /path/to/Talend-RemoteEngine/bin

If the engine starts successfully, the problem is with the systemd wrapper or permissions, not the engine itself.

- Check and Correct File Permissions

Ensure that the service wrapper scripts (e.g., trun.sh, talend-remote-engine-wrapper, or talend-remote-engine-service) are executable by the user running the service. For example:

chmod +x /path/to/Talend-RemoteEngine/bin/trun.sh

chmod +x /path/to/Talend-RemoteEngine/bin/talend-remote-engine-wrapper

chmod +x /path/to/Talend-RemoteEngine/bin/talend-remote-engine-service

Also, verify that the files are readable by the intended user and, if necessary, by the systemd process.
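Before changing permissions, it can help to see which of the wrapper scripts are actually missing or not executable. The following is a minimal sketch, assuming it is run on the Linux host as the same user that runs the service. The function name `check_launch_scripts` is illustrative, and the paths are the placeholders from the chmod example, not real locations.

```python
import os


def check_launch_scripts(paths):
    """Map each path to 'missing', 'not executable', or 'ok' for the current user."""
    report = {}
    for path in paths:
        if not os.path.isfile(path):
            report[path] = "missing"
        elif os.access(path, os.X_OK):
            report[path] = "ok"
        else:
            report[path] = "not executable"
    return report


# Placeholder directory from the example above; replace with the real install path.
BIN_DIR = "/path/to/Talend-RemoteEngine/bin"
SCRIPTS = ["trun.sh", "talend-remote-engine-wrapper", "talend-remote-engine-service"]

for path, status in check_launch_scripts(os.path.join(BIN_DIR, name) for name in SCRIPTS).items():
    print(f"{path}: {status}")
```

A script reported as "ok" here while the service still exits with status 126 points away from plain file permissions and toward a policy restriction such as SELinux.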

Solution for B

- Address SELinux Restrictions

By default, SELinux may block execution of the Talend Remote Engine service wrapper, resulting in permission errors even if file permissions are correct.

- Temporarily disable SELinux

To confirm if it is the source of the problem:

sudo setenforce 0

Then, retry starting the service. If the service starts successfully, SELinux is the cause.

- Permanently disable SELinux (if your security policy allows):

Edit /etc/selinux/config and set:

SELINUX=disabled

Reboot the server for the change to take effect.

- Alternatively, set SELinux to permissive mode:

Edit /etc/selinux/config and set:

SELINUX=permissive

Reboot the server for the change to take effect.

Disabling SELinux reduces system security. Consider creating a custom SELinux policy to allow execution if you must keep SELinux enabled.

Cause

Exit status 126 in systemd indicates that the command was found but is not executable. This typically points to a permission issue on the script or binary that systemd is attempting to launch.

In the context of Talend Remote Engine, this is often caused by:

Cause A

Incorrect file permissions on the service wrapper scripts or binaries.

Cause B

SELinux policies prevent execution of the wrapper script or service file.

Environment
