Featured Content

-

How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Phone Numbers

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support

Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*)

Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) reach out to your Account Manager or Customer Success Manager

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

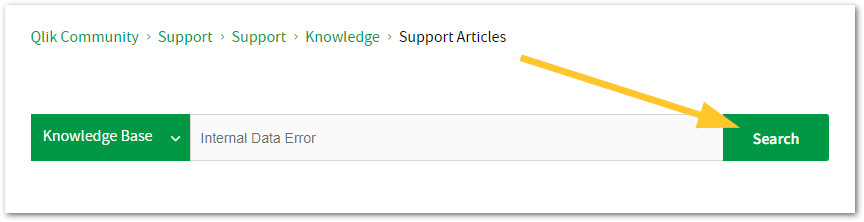

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

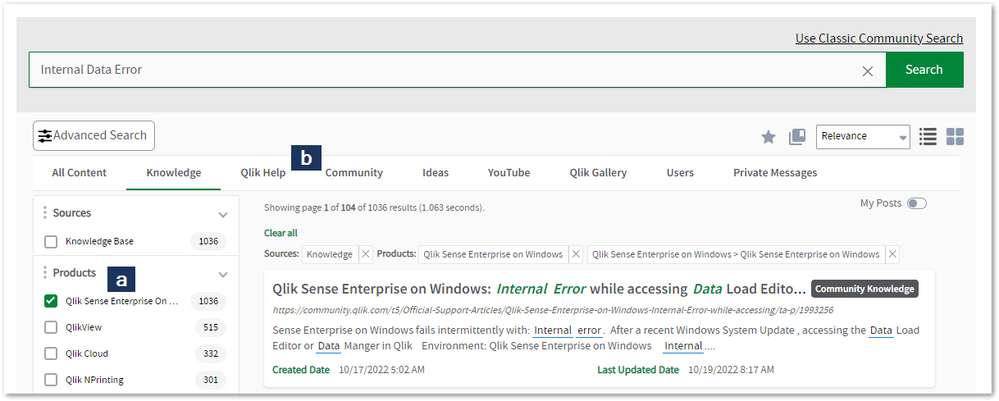

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our Youtube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar to facilitate knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to register.myqlik.qlik.com

If you already have an account, please see How To Reset The Password of a Qlik Account for help using your existing account.

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. NOTE, this link will expire after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- Create a case on your behalf, using step-by-step intake questions, when an item needs further investigation

3. Qlik Support Case Portal

Log in to manage and track your active cases in the Case Portal.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not Severity 1 Error or Severity 2 Issue. For more information, visit our Qlik Support Policy.

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Phone Numbers

When other Support Channels are down for maintenance, please contact us via phone for high severity production-down concerns.

- Qlik Data Analytics: 1-877-754-5843

- Qlik Data Integration: 1-781-730-4060

- Talend AMER Region: 1-800-810-3065

- Talend UK Region: 44-800-098-8473

- Talend APAC Region: 65-800-492-2269

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents

-

Binary load fails with General Script Error when using app binary instead of .qv...

A binary load command that refers to the app ID (example Binary[idapp];) does not work and fails with:

General Script Error

or

Binary load fails with error Cannot open file

This is observed after upgrading to Qlik Sense Enterprise on Windows November 2024 Patch 8, and any later releases.

It was previously possible to do binary loads from app binaries without the .qvf file extension (GUID number file name). With recent releases, Qlik is enforcing an already existing restriction, meaning the QVF file extension is now required. See Binary Load and Limitations for details.

This is working as designed and is intended as a security measure.

Example of a valid binary load (the file name ends in .qvf; YourApp.qvf is a placeholder):

    Binary [lib://Apps/YourApp.qvf];

Example of an invalid binary load:

    Binary [lib://Apps/777a0a66-555x-8888-xx7e-64442fa4xxx44];

Environment

- Qlik Sense Enterprise on Windows November 2024 Patch 8 and any later releases
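To find affected scripts before upgrading, a load script can be scanned for Binary statements whose target does not end in .qvf. The following is a minimal sketch, not a Qlik tool: the function name and the regular expression are assumptions made for illustration, and the pattern only covers the simple bracketed or unbracketed forms shown in this article.

```python
import re

# Matches statements such as: Binary [lib://Apps/Sales.qvf];
# Quoted paths and other variants are not handled in this sketch.
BINARY_STMT = re.compile(
    r"^\s*binary\b\s*\[?([^\];]+)\]?\s*;",
    re.IGNORECASE | re.MULTILINE,
)


def find_invalid_binary_loads(script: str) -> list[str]:
    """Return the Binary targets in a load script that lack the .qvf extension."""
    targets = (match.group(1).strip() for match in BINARY_STMT.finditer(script))
    return [target for target in targets if not target.lower().endswith(".qvf")]
```

Running the function over exported load scripts gives a list of statements to correct by hand; an empty list means no Binary statement in the script matched the pattern without a .qvf extension.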

-

Talend Studio: How to update column properties when using a dynamic schema

In many cases, when using a dynamic schema, it's necessary to change the properties of a column to map the target data structure. The column properties include column name, value, type, etc.

Example Job

The following image shows an example of how to get the column properties on a tJavaRow component and update them.

The example Java code below renames the column 'name' to a new name and changes the data type of the column 'age' to Integer. Copy this code and modify it according to your specific needs; it supports changing the column name, data type, length, precision, etc.

    // Read the dynamic column from the incoming row
    Dynamic columns = input_row.data;
    for (int i = 0; i < columns.getColumnCount(); i++) {
        DynamicMetadata columnMetadata = columns.getColumnMetadata(i);
        String col_name = columnMetadata.getName();
        String col_type = columnMetadata.getType();
        // Rename the column 'name'
        if (col_name.equals("name")) {
            columnMetadata.setName("new_name");
        }
        // Change the data type of the column 'age' to Integer
        if (col_name.equals("age")) {
            columnMetadata.setType("id_Integer");
        }
    }
    // Pass the updated dynamic column to the output row
    output_row.data = columns;

Note: Talend Support does not support custom code development or debugging.

Environment

Talend Data Integration 8.0.1

-

Qlik Stitch: Data Loading Error "The data for this table would result in more th...

You may be experiencing the following data loading error when connecting the HubSpot Integration to a destination:

"The data for this table would result in more than 1,600 columns"

Resolution

To resolve this issue and bring your integration back online, follow the steps below. This process applies to any affected table (for example, the contacts, deals, or companies tables).

- Pause the Integration

  Start by pausing the HubSpot integration in Stitch to prevent new data from syncing during the fix.

- Update Field Selection

  - Navigate to the affected table (for example: contacts, deals) in the Stitch UI.

  - Deselect the main properties field.

  - Manually select only the individual fields you need. These will be listed as properties_<field_name>.

- Drop the Affected Table in the destination (Redshift as an example)

  In your database, drop the oversized table to allow Stitch to recreate it with the updated schema.

- Reset the Table in Stitch

  - Go to Tables to Replicate > [table_name].

  - Click on Table Settings.

  - Choose Reset Table, and confirm the prompt.

  This will trigger a rebuild of the table with the selected fields only.

- Unpause the Integration

  Once everything is updated, unpause the integration to resume syncing.
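Before resetting the table, it can help to estimate how many columns a field selection would produce. The sketch below is only an illustration under stated assumptions: it flattens a nested sample record into column names that mimic the properties_<field_name> pattern and compares the count with the 1,600 limit quoted in the error. The function names are hypothetical, and this is not Stitch's actual de-nesting logic.

```python
COLUMN_LIMIT = 1600  # limit quoted in the error message


def denested_columns(record: dict, parent: str = "") -> list[str]:
    """Flatten nested objects into column names joined by underscores."""
    columns = []
    for key, value in record.items():
        name = f"{parent}_{key}" if parent else key
        if isinstance(value, dict) and value:
            # A nested object contributes one column per nested field.
            columns.extend(denested_columns(value, name))
        else:
            columns.append(name)
    return columns


def exceeds_limit(record: dict, limit: int = COLUMN_LIMIT) -> bool:
    """Return True when the flattened record has more columns than the limit."""
    return len(denested_columns(record)) > limit
```

For example, a sample record with an id and a properties object holding 1,700 fields flattens to 1,701 columns, so exceeds_limit returns True; trimming the selection to the fields actually needed brings the count back under the limit.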

Cause

This error message indicates that the data being replicated into your destination exceeds the 1,600-column limit imposed by the warehouse (e.g., Redshift) during loading. It most commonly arises when Stitch attempts to de-nest complex fields like properties from HubSpot tables such as contacts, deals, and companies.

These properties fields act as containers for a large number of individual fields. When de-nested, they can generate a lot of columns, especially if:

- The top-level properties field is selected, and

- Individual properties_<field_name> fields are also selected

Even if you deselect some individual fields, selecting the main properties field overrides those exclusions, and all properties are still replicated. This can push your table schema past the 1,600-column limit.

Environment


-

Qlik Stitch Q & A: Community Supported Integrations

Question I

What are Community Supported Integrations in Stitch?

Community Integrations are developed by members of the Singer open source community, and the code to run them is publicly available.

While Singer Community Integrations can be run within Stitch, Stitch does not actively support them. The Singer Community maintains and supports Community Integrations. This includes fixing bugs and adapting to new versions of third-party APIs.

Question II

How do I request support for a Community Supported Integration?

To request support for a community-supported integration, you have a few options, outlined below:

- Open an “issue” on the integration repo.

- Reach out to the Singer Community via the Singer Slack group. Provide relevant log lines from the Extraction Logs page within Stitch, a brief summary of the behavior, and any additional context surrounding its occurrence. This helps any community members who move to address the issue. Our Engineering team also periodically reviews issues and pull requests opened on Singer repositories.

- Contribute to the code yourself by creating a pull request.

With all of that mentioned, since the error is due to a forbidden connection, you should make sure that nothing related to permissions has changed, then try to re-authorise the integration and run a new job. You should also try generating a new API Token here:

https://www.stitchdata.com/docs/integrations/saas/shiphero#setup

Singer: Simple, Composable, Open Source ETL

This information is only related to the community-supported integrations.

Environment

-

Qlik Replicate: Errors Due to Unsupported SQL Server Versions

Starting from Qlik Replicate versions 2024.5 and 2024.11, Microsoft SQL Server 2012 and 2014 are no longer supported. Supported SQL Server versions include 2016, 2017, 2019, and 2022. For up-to-date information, see Support Source Endpoints for your respective version.

Attempting to connect to unsupported versions, both on-premise and cloud, can result in various errors.

Examples of reported Errors:

- Qlik Replicate 2024.5 accessing Microsoft Azure SQL Server 2014

[SOURCE_CAPTURE ]W: Table 'dbo'.'tableName' has encrypted column(s), but the 'Capture data from Always Encrypted database' option is disabled. The table will be suspended (sqlserver_endpoint_capture.c:157)

- Qlik Replicate 2024.11 accessing Microsoft SQL Server 2014

[SOURCE_UNLOAD ]E: RetCode: SQL_ERROR SqlState: 42S02 NativeError: 208 Message: [Microsoft][ODBC Driver 18 for SQL Server][SQL Server]Invalid object name 'sys.column_encryption_keys'. Line: 1 Column: -1 [1022502] (ar_odbc_stmt.c:4067)

Cause

The system view sys.column_encryption_keys is only available starting from SQL Server 2016. Attempting to query this view on earlier versions results in errors.

Reference: sys.column_encryption_keys (Microsoft Docs)

Resolution

Upgrade your SQL Server instances to a supported version (2016 or later) to ensure compatibility with Qlik Replicate 2024.5 and above.
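A version check can be run before pointing a task at a source. The sketch below assumes the version string comes from SELECT SERVERPROPERTY('ProductVersion') on an on-premises SQL Server instance, where major version 13 corresponds to SQL Server 2016. The function name is hypothetical. Azure SQL Database reports its own 12.x engine version, so this check should not be applied to it.

```python
# Major version numbers of the on-premises SQL Server releases named in this article.
SQL_SERVER_RELEASES = {
    11: "2012",
    12: "2014",
    13: "2016",
    14: "2017",
    15: "2019",
    16: "2022",
}
MIN_SUPPORTED_MAJOR = 13  # SQL Server 2016


def is_supported(product_version: str) -> bool:
    """Return True when the ProductVersion string is SQL Server 2016 or later."""
    major = int(product_version.split(".")[0])
    return major >= MIN_SUPPORTED_MAJOR
```

A ProductVersion of 12.0.6024.0 (SQL Server 2014) is rejected, while 13.0.5026.0 (SQL Server 2016) passes. The supported list for a given Qlik Replicate release should still be confirmed in the product documentation.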

Internal Investigation ID(s)

00375940, 00376089

Environment

- Qlik Replicate versions 2024.5, 2024.11, and higher

- Microsoft SQL Server 2014, 2012, and lower (unsupported)


-

Import or Export of Extensions fails on Qlik Sense Enterprise on Windows

Adding an extension in the Qlik Sense Enterprise Management Console fails with error can't add extension. The extension is otherwise valid and can be...

-

Unable to connect to Azure SQL database using Microsoft Entra MFA: Connection br...

Setting up a Qlik Cloud Analytics connection to an Azure SQL database with Microsoft Entra MFA enabled fails at the connection test with:

Error message: Please check the value for Username, Password, Host and other properties.

Description: Communication link failure

ERROR [08S01][Qlik][SqlServer] Connection broken unexpectedly.

Resolution

Create an Azure SQL database connection using a supported authentication method.

See Authenticating the driver.

Microsoft Entra MFA is not supported.

Environment

- Qlik Cloud Analytics

-

Qlik NPrinting: License information was removed by the restore procedure

After otherwise successfully restoring a Qlik NPrinting backup, the license information remains blank. This information is expected to be restored as well.

Resolution

This has been addressed as defect QCB-31631.

Workaround:

Activate the Qlik NPrinting license manually.

Fix Version:

Qlik NPrinting February 2024 SR5 and later releases. See the Release Notes for details.

Environment

- Qlik NPrinting May 2023 SR6 and SR7

- Qlik NPrinting February 2024 SR3 and SR4

Information provided on this defect is given as is at the time of documenting. For up-to-date information, please review the most recent Release Notes or contact support with the ID QCB-31631 for reference.

-

Qlik Talend Studio: How to find all Jobs that rely on deprecated components for ...

Because the tHttpRequest, tFileFetch, and tREST components have been removed from Talend Studio 8.0 R2025-04 onwards in favor of tHTTPClient, some adjustments are required to transition to tHTTPClient if your current Talend Jobs actively use any of the deprecated components.

This article briefly introduces how to find all Jobs that rely on the deprecated components so they can be replaced.

How to

- Create a dummy job or find a Job using the related deprecated components

- Right-click the component and choose "Find Component in Jobs" from the list. Studio then searches for all Jobs that use this component.


Internal Investigation ID(s)

QTDI-783 - Deprecate tREST component in favor of tHTTPClient

QTDI-784 - Deprecate tFileFetch component in favor of tHTTPClient

QTDI-785 - Deprecate tHTTPRequest component in favor of tHTTPClient

Environment

-

Qlik Predict 500 Error in Dashboards: Operation was rejected because the system ...

Visualizations calling data points within a Qlik App from Qlik Predict (previously AutoML) produce the following error:

Error: Operation was rejected because the system is not in a state required for the operation's execution.

An additional error seen may be:

"grpc::StatusCode::FAILED_PRECONDITION: 'Error returned by endpoint: InternalServerError Internal Server Error'".

Resolution

Retrain the model with the same training data last used to train the Qlik Predict model, then reload the app.

Cause

Qlik has recently performed back-end changes that may impact a small number of tenants which have not had their Qlik Predict models retrained since March or April 2025.

Internal Investigation ID(s)

SUPPORT-1294

Environment

- Qlik Predict

-

Qlik Sense: How to make a virtual proxy else than Central Proxy the default

The central proxy virtual proxy cannot be deleted, and virtual proxy prefixes are unique per proxy.

Environments:

Qlik Sense Enterprise on Windows

Resolution:

To make a different virtual proxy the default (for example, to use another authentication method by default), move the empty prefix from the Central Proxy to that virtual proxy:

- In the QMC, go to "Virtual proxies" > "Central proxy (default)"

- Modify the virtual proxy prefix to the desired string

- Click "Apply" in order to save

- Go back to "Virtual proxies", double-click on the virtual proxy that you want to make default in order to edit it.

- Remove the virtual proxy prefix

- Click "Apply" in order to save

Note: If the virtual proxy made default is using SAML authentication, the SAML Assertion Consumer Service URL will also need to be updated in the Identity Provider configuration.

-

Qlik Sense ODBC connection fails with error System error: The handle is invalid ...

Some data connections (such as the Microsoft SQL connector in the ODBC Connector package) may stop working after the Qlik Sense on-premise service account password is changed.

The following error is shown:

LIB CONNECT TO [Connection-Name]

Error: Connection aborted (System error: The handle is invalid.)

Execution Failed

Execution finished.

This happens even if the user ID for the connection itself is not the same as the one used for the service account.

Resolution

Re-create the encryption keys. For details, see Solution 3 in Qlik Sense Data Connectors are missing.

Environment

- Qlik Sense Enterprise on Windows

-

Unable to open an app sheet using HTTP in Qlik Sense Enterprise on Windows May 2...

Accessing a Qlik Sense app sheet using HTTP fails. A blank, white screen is displayed.

HTTPS is not affected.

Resolution

This has been identified as defect QCB-32130 and affects Qlik Sense Enterprise on Windows May 2025 IR.

Workaround:

Use HTTPS.

Fix Version:

Qlik Sense Enterprise on Windows May 2025 Service Patch 1 and any higher releases.

Cause

Product Defect ID: QCB-32130

Environment

- Qlik Sense Enterprise on Windows May 2025 IR

Information provided on this defect is given as is at the time of documenting. For up-to-date information, please review the most recent Release Notes or contact support with the ID QCB-32130 for reference.

-

Introducing Automation Sharing and Collaboration

This capability is being rolled out across regions over time:

- May 5: India, Japan, Middle East, Sweden

- TBD: Asia Pacific, Germany, United Kingdom, Singapore

- TBD: United States

- TBD: Europe

- TBD: Qlik Cloud Government

[Update: May 7] The previously-scheduled rollouts of Automation Sharing and Collaboration for some regions have been temporarily postponed. We are working on an updated release plan and updated release dates are soon to be determined (TBD). Thank you for your understanding.

With the introduction of shared automations, it will be possible to create, run, and manage automations in shared spaces.

Content

- Allow other users to run an automation

- Collaborate on existing automations

- Collaborate through duplication

- Extended context menus

- Context menu for owners:

- Context menu for non-owners:

- Monitoring

- Administration Center

- Activity Center

- Run history details

- Metrics

Allow other users to run an automation

Limit the execution of an automation to specific users.

Every automation has an owner. When an automation runs, it will always run using the automation connections configured by the owner. Any Qlik connectors that are used will use the owner's Qlik account. This guarantees that the execution happens as the owner intended it to happen.

The user who created the run, along with the automation's owner at run time, are both logged in the automation run history.

These are five options on how to run an automation:

- Run an automation from the Hub and Catalog

- Run an automation from the Automations activity center

- Run an automation through a button in an app

You can now allow other users to run an automation through the Button object in an app without needing the automation to be configured in Triggered run mode. This allows you to limit the users who can execute the automation to members of the automation's space.

More information about using the Button object in an app to trigger automation can be found in How to run an automation with custom parameters through the Qlik Sense button.

- Programmatic executions of an automation

- Automations API: Members of a shared space will be able to run the automations over the /runs endpoint if they have sufficient permissions.

- Run Automation and Call Automation blocks

- Note for triggered automations: the user who creates the run is not logged as no user specific information is used to start the run. The authentication to run a triggered automation depends on the Execution Token only.
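A programmatic run over the /runs endpoint could be prepared along these lines. This is a sketch under assumptions, not a reference implementation: the /api/v1/automations/{id} path prefix, the bearer-token header, and the empty JSON body are all assumed for illustration (only the /runs endpoint is named in this article), and the function name is hypothetical. Check the Automations API reference for the exact request shape. The code only builds the request; it does not send it.

```python
import json
import urllib.request


def build_run_request(tenant_url: str, automation_id: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that would start an automation run."""
    # Assumed path layout; only the trailing /runs segment comes from the article.
    url = f"{tenant_url.rstrip('/')}/api/v1/automations/{automation_id}/runs"
    return urllib.request.Request(
        url,
        data=json.dumps({}).encode("utf-8"),  # placeholder body
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to urllib.request.urlopen would send it. The caller needs sufficient permissions in the automation's space, and the run still uses the owner's connections as described above.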

Collaborate on existing automations

Collaborate on an automation through duplication.

Automations are used to orchestrate various tasks; from Qlik use cases like reload task chaining, app versioning, or tenant management, to action-oriented use cases like updating opportunities in your CRM, managing supply chain operations, or managing warehouse inventories.

Collaborate through duplication

To prevent users from editing these live automations, we're putting forward a collaborate through duplication approach. This makes it impossible for non-owners to change an automation that can negatively impact operations.

When a user duplicates an existing automation, they will become the owner of the duplicate. This means the new owner's Qlik account will be used for any Qlik connectors, so they must have sufficient permissions to access the resources used by the automation. They will also need permissions to use the automation connections required in any third-party blocks.

Automations can be duplicated through the context menu:

As it is not possible to display a preview of the automation blocks before duplication, please use the automation's description to provide a clear summary of the purpose of the automation:

Extended context menus

With this new delivery, we have also added new options in the automation context menu:

- Start a run from the context menu in the hub

- Duplicate automation

- Move automation to shared space

- Edit details (owners only)

- Open in new tab (owners only)

Context menu for owners:

Context menu for non-owners:

Monitoring

The Automations Activity Centers have been expanded with information about the space in which an automation lives. The Run page now also tracks which user created a run.

Note: Triggered automation runs will be displayed as if the owner created them.

Administration Center

The Automations view in Administration Center now includes the Space field and filter.

The Runs view in Administration Center now includes the Executed by and Space at runtime fields and filters.

Activity Center

The Automations view in Automations Activity Center now includes Space field and filter.

Note: Users can configure which columns are displayed here.

The Runs view in the Automations Activity Center now includes the Space at runtime, Executed by, and Owner fields and filters.

In this view, you can see all runs from automations you own as well as runs executed by other users. You can also see runs of other users' automations where you are the executor.

Run history details

To see the full details of an automation run, go to Run History through the automation's context menu. This is also accessible to non-owners with sufficient permissions in the space.

The run history view will show the automation's runs across users, and the user who created the run is indicated by the Executed by field.

Metrics

The metrics tab in the automations activity center has been deprecated in favor of the automations usage app which gives a more detailed view of automation consumption.

-

Qlik Sense Enterprise on Windows: PostgresSQL consuming 100% of CPU in two-hourl...

The PostgreSQL instance used by Qlik Sense (on-premise) uses 100% of CPU capacity. This behavior can be seen in an interval of 2 hours.

Cause

The Resource Distribution Service is set to run a 2-hourly scan on all extensions and themes as part of a sync to Qlik Sense Cloud. This has proven to have a dramatic effect in very large environments where postgres.exe is causing the CPU to spike to 100% for an extended duration, making the environment unusable.

Resolution

Switch off Resource Distribution or set it to run every 24 hours.

Switch off Resource Distribution

- Open the services.conf file (default: C:\Program Files\Qlik\Sense\ServiceDispatcher\services.conf) in a text editor elevated to Administrator permissions

- Locate the [resource-distribution] section.

- Set Disabled to true:

    [resource-distribution]
    Disabled=true
    Identity=Qlik.resource-distribution
    DisplayName=Resource Distribution
    ExePath=Node\node.exe
    Script=..\ResourceDistributionService\server.js

- Save the file

- Restart the Service Dispatcher

Schedule it to run every 24 hours

- Open the services.conf file (default: C:\Program Files\Qlik\Sense\ServiceDispatcher\services.conf) in a text editor elevated to Administrator permissions

- Locate the [resource-distribution] section.

- Enable the service by commenting out the Disabled setting (//Disabled=true), and set --interval-time to 86400000 (24 hours in milliseconds):

    [resource-distribution]
    //Disabled=true
    Identity=Qlik.resource-distribution
    DisplayName=Resource Distribution
    ExePath=Node\node.exe
    Script=..\ResourceDistributionService\server.js

    [resource-distribution.parameters]
    --secure
    --wes-port=${WESPort}
    --mode=server
    --log-path=${LogPath}
    --log-level=info
    --interval-time=86400000

- Save the file

- Restart the Service Dispatcher
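The --interval-time value of 86400000 matches 24 hours expressed in milliseconds. The helper below is a small sketch for deriving the value for other intervals. It assumes the parameter is in milliseconds, which is consistent with the 24-hour example above but is not stated elsewhere in this article; the function names are hypothetical.

```python
def hours_to_interval_ms(hours: float) -> int:
    """Convert an interval in hours to milliseconds."""
    return int(hours * 60 * 60 * 1000)


def interval_parameter(hours: float) -> str:
    """Format the services.conf parameter line for the given interval."""
    return f"--interval-time={hours_to_interval_ms(hours)}"


print(interval_parameter(24))  # --interval-time=86400000
```

By the same conversion, the default 2-hour scan corresponds to 7200000.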

Internal Investigation ID(s)

QB-18723

Environment

Qlik Sense Enterprise on Windows (November 2022 and later)

-

How to create a Qlik Oracle Wallet ODBC connection via the QRS Rest API

This article documents how to successfully implement an Oracle Wallet into a POST call, which is a necessary step when setting up a Qlik Oracle ODBC connection using the Wallet authentication method via the Qlik QRS API (post /dataconnection).

You can use the following approach to achieve this.

The first three steps will show you what information you need for the POST call.

- Create a manual Qlik Oracle connection via Wallet authentication in the Qlik Data Load Editor

- Get the connection details in the form of a JSON via the following QRS call:

get /dataconnection/{id}

For details, review get /dataconnection/{id}.

In our example, the data connection ID is 67069b0e-ef40-4873-91a8-8a9c56d61ebd (locate the ID in the Qlik Sense Management Console Data Connections section).

To retrieve all necessary information for the POST call, use the following PowerShell script, which queries the repository using the user "sa_repository" and domain "internal".

# Ignore SSL validation errors
add-type @"
using System.Net;
using System.Security.Cryptography.X509Certificates;
public class TrustAllCertsPolicy : ICertificatePolicy {
    public bool CheckValidationResult(
        ServicePoint srvPoint, X509Certificate certificate,
        WebRequest request, int certificateProblem) {
        return true;
    }
}
"@
[System.Net.ServicePointManager]::CertificatePolicy = New-Object TrustAllCertsPolicy

# Force TLS 1.2
[Net.ServicePointManager]::SecurityProtocol = [Net.SecurityProtocolType]::Tls12

# Headers and cert
$hdrs = @{
    "X-Qlik-xrfkey" = "12345678qwertyui"
    "X-Qlik-User"   = "UserDirectory=internal;UserId=sa_repository"
}
$cert = Get-ChildItem -Path "Cert:\CurrentUser\My" | Where-Object { $_.Subject -like '*QlikClient*' }
if (-not $cert) {
    Write-Error "Qlik client certificate not found!"
    exit
}

# API URL
$url = "https://localhost:4242/qrs/dataconnection/67069b0e-ef40-4873-91a8-8a9c56d61ebd?xrfkey=12345678qwertyui"

# Make request
try {
    $resp = Invoke-RestMethod -Uri $url -Method Get -Headers $hdrs -Certificate $cert
    $resp | ConvertTo-Json -Depth 10
} catch {
    Write-Error "API call failed: $_"
}

- The result of step two is a JSON structure that should look similar to this example:

{
  "id": "67069b0e-ef40-4873-91a8-8a9c56d61ebd",
  "createdDate": "2024-07-08T10:15:25.144Z",
  "modifiedDate": "2025-04-25T09:34:56.948Z",
  "modifiedByUserName": "DOMAIN\\administrator",
  "customProperties": [],
  "owner": {
    "id": "0e756718-ddfa-457a-a219-256211c8dcb4",
    "userId": "administrator",
    "userDirectory": "DOMAIN",
    "userDirectoryConnectorName": "DOMAIN",
    "name": "Administrator",
    "privileges": null
  },
  "name": "Oracle_TEST (domain_administrator)",
  "connectionstring": "CUSTOM CONNECT TO \"provider=QvOdbcConnectorPackage.exe;driver=oracle;ConnectionType=wallet;port=1521;USETNS=false;TnsName=zx0ka5pcjb3oxzut_high;EnableNcharSupport=1;allowNonSelectQueries=false;QueryTimeout=30;useBulkReader=true;maxStringLength=4096;logSQLStatements=false;\"",
  "type": "QvOdbcConnectorPackage.exe",
  "engineObjectId": "c4a089ad-b853-4841-b96c-79d259fba388",
  "username": "ADMIN",
  "password": "Wallet%2wallet.zip%%2UEsDBF...", // truncated for readability
  "logOn": 0,
  "architecture": 0,
  "tags": [],
  "privileges": null,
  "schemaPath": "DataConnection"
}

Note "ConnectionType=wallet" in the connection string and "password": "Wallet%2wallet.zip%%2UEsDBF...". The password value contains the encoded information from the Oracle Wallet.

- Now apply all information from steps two and three to the template for the post /dataconnection request. See post /dataconnection for details. This allows you to create the Oracle ODBC connection via an API call (using the same Oracle Wallet).

The JSON could look something like this:

{
  "name": "Oracle_TEST123",
  "connectionstring": "CUSTOM CONNECT TO \"provider=QvOdbcConnectorPackage.exe;driver=oracle;ConnectionType=wallet;port=1521;USETNS=false;TnsName=zx0ka5pcjb3oxzut_high;EnableNcharSupport=1;allowNonSelectQueries=false;QueryTimeout=30;useBulkReader=true;maxStringLength=4096;logSQLStatements=false;\"",
  "type": "QvOdbcConnectorPackage.exe",
  "username": "ADMIN",
  "password": "Wallet%2wallet.zip%%2UEsDBF...", // truncated for readability
  "logOn": 0,
  "architecture": 0,
  "schemaPath": "DataConnection",
  "tags": [],
  "customProperties": [],
  "owner": {
    "id": "0e756718-ddfa-457a-a219-256211c8dcb4",
    "userId": "administrator",
    "userDirectory": "DOMAIN",
    "userDirectoryConnectorName": "DOMAIN",
    "name": "Administrator"
  }
}

PowerShell script example:

$hdrs = @{
    "X-Qlik-xrfkey" = "12345678qwertyui"
    "X-Qlik-User"   = "UserDirectory=internal;UserId=sa_repository"
    "Content-Type"  = "application/json"
}
$cert = Get-ChildItem -Path "Cert:\CurrentUser\My" | Where-Object { $_.Subject -like '*QlikClient*' }

$body = @{
    name = "Oracle_TEST123"
    connectionstring = 'CUSTOM CONNECT TO "provider=QvOdbcConnectorPackage.exe;driver=oracle;ConnectionType=wallet;port=1521;USETNS=false;TnsName=zx0ka5pcjb3oxzut_high;EnableNcharSupport=1;allowNonSelectQueries=false;QueryTimeout=30;useBulkReader=true;maxStringLength=4096;logSQLStatements=false;"'
    type = "QvOdbcConnectorPackage.exe"
    username = "ADMIN"
    password = "Wallet%2wallet.zip%%2UEsDBF..." # truncated
    logOn = 0
    architecture = 0
    schemaPath = "DataConnection"
    tags = @()
    customProperties = @()
    owner = @{
        id = "0e756718-ddfa-457a-a219-256211c8dcb4"
        userId = "administrator"
        userDirectory = "DOMAIN"
        userDirectoryConnectorName = "DOMAIN"
        name = "Administrator"
    }
}
$jsonBody = $body | ConvertTo-Json -Depth 10

$url = "https://localhost:4242/qrs/dataconnection?xrfkey=12345678qwertyui"

try {
    $response = Invoke-RestMethod -Uri $url -Method Post -Headers $hdrs -Certificate $cert -Body $jsonBody
    $response | ConvertTo-Json -Depth 10
} catch {
    Write-Error "POST failed: $_"
}

You now have everything you need to create a new Oracle connection (Oracle_TEST123 as named in our example) using the post /dataconnection QRS REST API call.
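Comparing the two JSON examples in this article, the POST body is the GET result with a new name and without the fields id, createdDate, modifiedDate, modifiedByUserName, engineObjectId, and privileges. The following Python sketch illustrates that transformation. The function name `build_post_body` and the constant `SERVER_FIELDS` are hypothetical, and the list of dropped fields is inferred only from the examples shown here, not from the QRS API reference.

```python
import copy

# Top-level fields that appear in the GET example but not in the POST example
SERVER_FIELDS = {
    "id", "createdDate", "modifiedDate", "modifiedByUserName",
    "engineObjectId", "privileges",
}


def build_post_body(get_response: dict, new_name: str) -> dict:
    """Derive a POST /dataconnection body from a GET /dataconnection/{id} result.

    The connection string, username, and wallet-encoded password are carried
    over unchanged; only the name is replaced. The input dict is not modified.
    """
    body = {
        key: copy.deepcopy(value)
        for key, value in get_response.items()
        if key not in SERVER_FIELDS
    }
    body["name"] = new_name
    owner = body.get("owner")
    if isinstance(owner, dict):
        # The POST example keeps the owner's id but omits its privileges entry
        owner.pop("privileges", None)
    return body
```

The returned dict can be serialized with `json.dumps` and sent as the request body in the same way as `$jsonBody` in the PowerShell example.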

Environment

- Qlik ODBC Connector Package

This article is provided as is. For additional assistance, post your query in our Integration and API forum or contact Services for customized solution assistance.

-

Qlik Stitch: How to Upgrade the Integration to Latest Version with Same Destinat...

Deprecated and sunset integrations are not eligible for support, so an integration that uses a deprecated version of the tap must be upgraded to the latest version. This article describes how to upgrade an integration to the latest version while keeping the same destination schema.

How to Upgrade to the New Version

If you don't mind changing the schema name, create a new integration with a different name and delete the old one.

Creating a new integration with a different schema name is recommended.

How to Upgrade to the New Version with Same Destination Schema

If you prefer to reuse the same destination schema name, follow the steps below:

- Pause the existing integration in your Stitch account.

- Wait for any records that are being prepared for loading to be loaded.

- Take note of the tables and fields tracked for replication, as well as the replication methods defined for these tables.

- Delete the existing integration from your Stitch account.

- Drop the schema from your destination data warehouse (or rename it if you'd like to continue working from this data as Stitch completes a new historical replication).

- Contact Qlik Stitch Support to co-ordinate a manual row-usage exemption for this connection.

- Configure a new integration with the same name, and Stitch will create this schema in your destination during the loading process.

Related Content

Environment

-

Qlik Alerting Data Alerts do not trigger Alert mails when set to Previous Scans

Creating an alert with a Previous condition succeeds, but no alert email is delivered.

The Qlik alerting log (C:\ProgramData\QlikAlerting\condition) records:

INFO history for condition 0 alertId 0699511f-081c-4466-b949-3da55123b617 user 83c0879f-3a4b-4073-bbd4-8617b80a048f

ERROR History not found

A similar message can be found in the worker log (C:\ProgramData\QlikAlerting\worker):

WARN 0699511f-081c-4466-b949-3da55123b617 error receiving condition

WARN Error: Inactive

Resolution

- Open an Alert

- Go to Condition

- Set Manual value and run the alert once

- Switch the condition back to Previous scans; a history is now available

Cause

No history exists for Previous Scans.

For more information on Calculated Conditions and previous history, see Calculate conditions.

Environment

- Qlik Alerting

-

Qlik Talend Product: How to set up Key Pair Authentication for Snowflake in Tale...

This guide offers a step-by-step process for setting up key-pair authentication for Snowflake in Talend Studio at the Job level.

The process can be summarized in three steps:

- Creating the .p12 file with OpenSSL

- Configuring Snowflake

- Configuring Talend Studio at Job Level

Creating the .p12 File with OpenSSL

The .p12 file contains both the private and public keys, along with the owner's details (such as name, email address, etc.), all certified by a trusted third party. With this certificate, a user can authenticate and identify themselves to any organization that recognizes the third-party certification.

The Talend tSetKeyStore component only accepts the .jks or .p12/.pfx formats. If you are using the PKCS8 format, you need to convert your .p8 certificates into a supported format.

- Generate the key with the following command:

openssl genpkey -algorithm RSA -out private.key -aes256

This generates a private key (private.key) using the RSA algorithm with AES-256 encryption. You will be prompted to enter a passphrase to protect the private key.

- Generate a self-signed certificate with the following command:

openssl req -new -x509 -key private.key -out certificate.crt -days 1825

This generates a self-signed certificate (certificate.crt) that is valid for 5 years. You will be prompted to enter details such as country, state, and organization.

- Once you have both the private key (private.key) and the certificate (certificate.crt), create the .p12 file with the following command and name your key alias:

openssl pkcs12 -export -out keystore.p12 -inkey private.key -in certificate.crt -name "abe"

Check the information in the created .p12 file with either of the following commands:

openssl pkcs12 -info -in keystore.p12

keytool -v -list -keystore keystore.p12

- Generate a public key with the following command:

openssl x509 -pubkey -noout -in certificate.crt > public.key

Configuring Snowflake

The USERADMIN role is required to perform the Snowflake configuration. Open your Snowflake environment and ensure you have a worksheet or query editor ready to execute the following SQL statements.

- Create the necessary Snowflake components (database, warehouse, user, and role) for testing purposes. If you already have an existing setup, feel free to reuse it.

-- Drop existing objects if they exist
DROP DATABASE IF EXISTS ABE_TALEND_DB;   -- Drop the test database
DROP WAREHOUSE IF EXISTS ABE_TALEND_WH;  -- Drop the test warehouse
DROP ROLE IF EXISTS ABE_TALEND_ROLE;     -- Drop the test role
DROP USER IF EXISTS ABE_TALEND_USER;     -- Drop the test user

-- Create necessary objects
CREATE WAREHOUSE ABE_TALEND_WH;          -- Create the warehouse
CREATE DATABASE ABE_TALEND_DB;           -- Create the test database
CREATE SCHEMA ABE_TALEND_DB.ABE;         -- Create the schema "ABE" in the test database

-- Create the test user
CREATE OR REPLACE USER ABE_TALEND_USER
  PASSWORD = 'pwd!'                      -- Replace with a secure password
  LOGIN_NAME = 'ABE_TALEND_USER'
  FIRST_NAME = 't'
  LAST_NAME = 'tt'
  EMAIL = 't.tt@qlik.com'                -- Replace with a valid email
  MUST_CHANGE_PASSWORD = FALSE
  DEFAULT_WAREHOUSE = ABE_TALEND_WH;

-- Grant necessary permissions
GRANT USAGE ON WAREHOUSE ABE_TALEND_WH TO ROLE SYSADMIN;  -- Grant warehouse access to SYSADMIN
CREATE ROLE IF NOT EXISTS ABE_TALEND_ROLE;                -- Create the custom role
GRANT ROLE ABE_TALEND_ROLE TO USER ABE_TALEND_USER;       -- Assign the role to the user
GRANT ALL PRIVILEGES ON DATABASE ABE_TALEND_DB TO ROLE ABE_TALEND_ROLE;                 -- Full access to the database
GRANT ALL PRIVILEGES ON ALL SCHEMAS IN DATABASE ABE_TALEND_DB TO ROLE ABE_TALEND_ROLE;  -- Full access to all schemas
GRANT ALL PRIVILEGES ON ALL TABLES IN SCHEMA ABE_TALEND_DB.ABE TO ROLE ABE_TALEND_ROLE; -- Full access to all tables in schema
GRANT USAGE ON WAREHOUSE ABE_TALEND_WH TO ROLE ABE_TALEND_ROLE;                         -- Grant warehouse usage to custom role

-- Verify user creation
SHOW USERS;

-- Create a test table and validate setup
CREATE TABLE ABE_TALEND_DB.ABE.ABETABLE (
  NAME VARCHAR(100)
);

-- Test data retrieval
SELECT * FROM ABE_TALEND_DB.ABE.ABETABLE;

- Assign the public key to the Snowflake test user created earlier. To do this:

- Locate public.key and open it in an editor (such as Notepad++)

- Copy the public key displayed between BEGIN PUBLIC KEY and END PUBLIC KEY

- In the Snowflake environment, open a worksheet or query editor and run the following SQL statements. ALTER USER adds the previously generated public key to the user (replace the placeholder with your own key), and DESCRIBE USER verifies that the key was successfully added.

ALTER USER ABE_TALEND_USER SET RSA_PUBLIC_KEY='<your public key>';
DESCRIBE USER ABE_TALEND_USER;

- Verify that the configuration is correct. In your Snowflake environment, open a worksheet or query editor, run the following SQL statements, and copy the result (a SHA-256 hash of the public key) into a text editor for reference.

DESC USER ABE_TALEND_USER;
SELECT SUBSTR((SELECT "value" FROM TABLE(RESULT_SCAN(LAST_QUERY_ID())) WHERE "property" = 'RSA_PUBLIC_KEY_FP'), LEN('SHA256:') + 1);

Using OpenSSL, calculate the SHA-256 hash of the public key and compare it with the one returned by Snowflake to ensure that they match.

To do that, use the following OpenSSL command:

openssl rsa -pubin -in public.key -outform DER | openssl dgst -sha256 -binary | openssl enc -base64

If the hashes match, proceed to the Talend Studio configuration.
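For environments where piping OpenSSL commands is inconvenient, the same comparison value can be sketched with the Python standard library. This assumes public.key is a PEM file delimited by BEGIN PUBLIC KEY and END PUBLIC KEY lines, as generated earlier; the function names `pem_body` and `public_key_fingerprint` are hypothetical. The base64 body of the PEM file decodes to the DER form of the key, which is then hashed with SHA-256 and base64-encoded, mirroring the OpenSSL pipeline above.

```python
import base64
import hashlib


def pem_body(pem_text: str) -> str:
    """Return the base64 text between the BEGIN/END lines as one string.

    This is also the part of public.key that is copied into ALTER USER.
    """
    lines = [line.strip() for line in pem_text.strip().splitlines()]
    return "".join(line for line in lines if line and not line.startswith("-----"))


def public_key_fingerprint(pem_text: str) -> str:
    """Base64-encoded SHA-256 digest of the DER-encoded public key."""
    der = base64.b64decode(pem_body(pem_text))
    return base64.b64encode(hashlib.sha256(der).digest()).decode("ascii")
```

For example, `public_key_fingerprint(open("public.key").read())` should give a 44-character string to compare with the Snowflake value after its SHA256: prefix has been removed.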

Configuring Talend Studio at Job Level

- Launch Talend Studio and drag the tSetKeyStore and tDBConnection (Snowflake) components from the Palette to the Designer tab.

- In the Basic settings of the tSetKeyStore component, enter the path to the keystore .p12 file, in double quotation marks, in the KeyStore file field.

- For the Snowflake DB connection, use the Key Alias set in the keystore .p12 file earlier ("abe" in this example).

- Test the connection to confirm that the key-pair authentication you set up works.

Related Content

Talend-Job-using-key-pair-authentication-for-Snowflake-fails

Environment

Talend Studio 8.0.1

-

Qlik Data Manager displays duplicated ODBC sources

ODBC data sources are duplicated in the Qlik Data Manager.

Resolution

Verify the \QvOdbcConnectorPackage folder in C:\Program Files\Common Files\Qlik\Custom Data. A duplicate likely exists.

Move the backup to a different location.

Cause

A copy of the C:\Program Files\Common Files\Qlik\Custom Data\QvOdbcConnectorPackage folder was created during an upgrade or patch preparation.
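The check described under Resolution can be sketched in Python as a quick way to list candidate copies. This assumes the backup folder keeps "QvOdbcConnectorPackage" somewhere in its name (for example with a suffix), which the article does not state; the function name `find_connector_copies` is hypothetical. The sketch only lists folders and does not move or delete anything.

```python
from pathlib import Path


def find_connector_copies(custom_data: Path,
                          name: str = "QvOdbcConnectorPackage") -> list[Path]:
    """Return sub-folders whose name contains `name` but is not exactly `name`.

    The comparison is case-insensitive. The original connector folder itself
    is never included in the result.
    """
    wanted = name.lower()
    return sorted(
        entry for entry in custom_data.iterdir()
        if entry.is_dir()
        and wanted in entry.name.lower()
        and entry.name.lower() != wanted
    )
```

A typical call would pass `Path(r"C:\Program Files\Common Files\Qlik\Custom Data")`; any folder returned is a candidate to move to a different location.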

Environment

- Qlik Sense Enterprise on Windows