Featured Content


How to contact Qlik Support

Qlik offers a wide range of channels to assist you in troubleshooting, answering frequently asked questions, and getting in touch with our technical experts. In this article, we guide you through all available avenues to secure your best possible experience.

For details on our terms and conditions, review the Qlik Support Policy.

Index:

- Support and Professional Services; who to contact when.

- Qlik Support: How to access the support you need

- 1. Qlik Community, Forums & Knowledge Base

- The Knowledge Base

- Blogs

- Our Support programs:

- The Qlik Forums

- Ideation

- How to create a Qlik ID

- 2. Chat

- 3. Qlik Support Case Portal

- Escalate a Support Case

- Phone Numbers

- Resources

Support and Professional Services; who to contact when.

We're happy to help! Here's a breakdown of resources for each type of need.

Support

Reactively fixes technical issues as well as answers narrowly defined specific questions. Handles administrative issues to keep the product up-to-date and functioning.

- Error messages

- Task crashes

- Latency issues (due to errors or 1-1 mode)

- Performance degradation without config changes

- Specific questions

- Licensing requests

- Bug Report / Hotfixes

- Not functioning as designed or documented

- Software regression

Professional Services (*)

Proactively accelerates projects, reduces risk, and achieves optimal configurations. Delivers expert help for training, planning, implementation, and performance improvement.

- Deployment Implementation

- Setting up new endpoints

- Performance Tuning

- Architecture design or optimization

- Automation

- Customization

- Environment Migration

- Health Check

- New functionality walkthrough

- Realtime upgrade assistance

(*) reach out to your Account Manager or Customer Success Manager

Qlik Support: How to access the support you need

1. Qlik Community, Forums & Knowledge Base

Your first line of support: https://community.qlik.com/

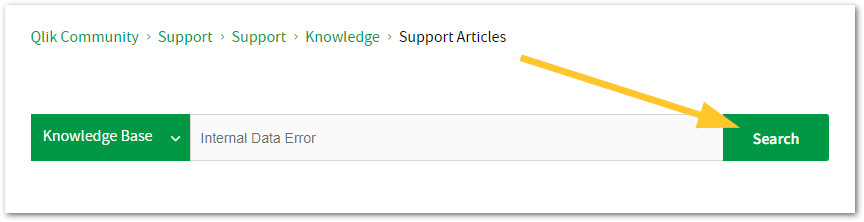

Looking for content? Type your question into our global search bar:

The Knowledge Base

Leverage the enhanced and continuously updated Knowledge Base to find solutions to your questions and best practice guides. Bookmark this page for quick access!

- Go to the Official Support Articles Knowledge base

- Type your question into our Search Engine

- Need more filters?

- Filter by Product

- Or switch tabs to browse content in the global community, on our Help Site, or even on our YouTube channel

Blogs

Subscribe to maximize your Qlik experience!

The Support Updates Blog

The Support Updates blog delivers important and useful Qlik Support information about end-of-product support, new service releases, and general support topics.

The Qlik Design Blog

The Design blog is all about product and Qlik solutions, such as scripting, data modelling, visual design, extensions, best practices, and more!

The Product Innovation Blog

By reading the Product Innovation blog, you will learn about what's new across all of the products in our growing Qlik product portfolio.

Our Support programs:

Q&A with Qlik

Live sessions with Qlik Experts in which we focus on your questions.

Techspert Talks

Techspert Talks is a free monthly webinar that facilitates knowledge sharing.

Technical Adoption Workshops

Our in-depth, hands-on workshops allow new Qlik Cloud Admins to build alongside Qlik Experts.

Qlik Fix

Qlik Fix is a series of short videos with helpful solutions for Qlik customers and partners.

The Qlik Forums

- Quick, convenient, 24/7 availability

- Monitored by Qlik Experts

- New releases publicly announced within Qlik Community forums

- Local language groups available

Ideation

Suggest an idea, and influence the next generation of Qlik features!

Search & Submit Ideas

Ideation Guidelines

How to create a Qlik ID

Get the full value of the community.

Register a Qlik ID:

- Go to register.myqlik.qlik.com

If you already have an account, please see How To Reset The Password of a Qlik Account for help using your existing account.

- You must enter your company name exactly as it appears on your license or there will be significant delays in getting access.

- You will receive a system-generated email with an activation link for your new account. Note: this link expires after 24 hours.

If you need additional details, see: Additional guidance on registering for a Qlik account

If you encounter problems with your Qlik ID, contact us through Live Chat!

2. Chat

Incidents are supported through our Chat, by clicking Chat Now on any Support Page across Qlik Community.

To raise a new issue, all you need to do is chat with us. With this, we can:

- Answer common questions instantly through our chatbot

- Have a live agent troubleshoot in real time

- For items that require further investigation, we will create a case on your behalf with step-by-step intake questions.

3. Qlik Support Case Portal

Log in to manage and track your active cases in the Case Portal.

Please note: to create a new case, it is easiest to do so via our chat (see above). Our chat will log your case through a series of guided intake questions.

Your advantages:

- Self-service access to all incidents so that you can track progress

- Option to upload documentation and troubleshooting files

- Option to include additional stakeholders and watchers to view active cases

- Follow-up conversations

When creating a case, you will be prompted to enter problem type and issue level. Definitions shared below:

Problem Type

Select Account Related for issues with your account, licenses, downloads, or payment.

Select Product Related for technical issues with Qlik products and platforms.

Priority

If your issue is account related, you will be asked to select a Priority level:

Select Medium/Low if the system is accessible, but there are some functional limitations that are not critical in the daily operation.

Select High if there are significant impacts on normal work or performance.

Select Urgent if there are major impacts on business-critical work or performance.

Severity

If your issue is product related, you will be asked to select a Severity level:

Severity 1: Qlik production software is down or not available, but not because of scheduled maintenance and/or upgrades.

Severity 2: Major functionality is not working in accordance with the technical specifications in documentation or significant performance degradation is experienced so that critical business operations cannot be performed.

Severity 3: Any error that is not a Severity 1 or Severity 2 issue. For more information, visit our Qlik Support Policy.
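The Priority and Severity definitions above amount to a small decision rule. The sketch below encodes that rule as a hypothetical self-check you could run before opening a case. The function and parameter names are illustrative assumptions, not part of any Qlik tool. The case form remains the authoritative place to choose a level.

```python
from enum import Enum


class Severity(Enum):
    """Severity levels for product-related cases."""
    SEVERITY_1 = 1
    SEVERITY_2 = 2
    SEVERITY_3 = 3


def product_severity(production_down: bool,
                     scheduled_maintenance: bool,
                     major_function_broken: bool,
                     blocking_degradation: bool) -> Severity:
    """Hypothetical self-check mirroring the Severity definitions.

    production_down: Qlik production software is down or not available.
    scheduled_maintenance: the outage is caused by scheduled maintenance/upgrades.
    major_function_broken: major functionality does not work as documented.
    blocking_degradation: performance has degraded so far that critical
        business operations cannot be performed.
    """
    # Severity 1: production is down, and not because of planned maintenance.
    if production_down and not scheduled_maintenance:
        return Severity.SEVERITY_1
    # Severity 2: broken major functionality or blocking performance degradation.
    if major_function_broken or blocking_degradation:
        return Severity.SEVERITY_2
    # Severity 3: any error that is neither Severity 1 nor Severity 2.
    return Severity.SEVERITY_3


def account_priority(business_critical_impact: bool, significant_impact: bool) -> str:
    """Hypothetical self-check mirroring the Priority definitions."""
    if business_critical_impact:
        return "Urgent"  # major impact on business-critical work or performance
    if significant_impact:
        return "High"  # significant impact on normal work or performance
    return "Medium/Low"  # accessible, with non-critical functional limitations
```

The checks run in descending order of impact, so the first matching definition wins. This matches how the definitions are written: Severity 3 and Medium/Low are the fallbacks.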

Escalate a Support Case

If you require a support case escalation, you have two options:

- Request to escalate within the case, mentioning the business reasons.

To escalate a support incident successfully, mention your intention to escalate in the open support case. This will begin the escalation process.

- Contact your Regional Support Manager

If more attention is required, contact your regional support manager. You can find a full list of regional support managers in the How to escalate a support case article.

Phone Numbers

When other Support Channels are down for maintenance, please contact us via phone for high severity production-down concerns.

- Qlik Data Analytics: 1-877-754-5843

- Qlik Data Integration: 1-781-730-4060

- Talend AMER Region: 1-800-810-3065

- Talend UK Region: 44-800-098-8473

- Talend APAC Region: 65-800-492-2269

Resources

A collection of useful links.

Qlik Cloud Status Page

Keep up to date with Qlik Cloud's status.

Support Policy

Review our Service Level Agreements and License Agreements.

Live Chat and Case Portal

Your one stop to contact us.

Recent Documents


The Qlik Sense Monitoring Applications for Cloud and On Premise

Qlik Sense Enterprise Client-Managed offers a range of Monitoring Applications that come pre-installed with the product.

Qlik Cloud offers the Data Capacity Reporting App for customers on a capacity subscription, and additionally customers can opt to leverage the Qlik Cloud Monitoring apps.

This article provides information on available apps for each platform.

Content:

- Qlik Cloud

- Data Capacity Reporting App

- Access Evaluator for Qlik Cloud

- Answers Analyzer for Qlik Cloud

- App Analyzer for Qlik Cloud

- Automation Analyzer for Qlik Cloud

- Entitlement Analyzer for Qlik Cloud

- Reload Analyzer for Qlik Cloud

- Report Analyzer for Qlik Cloud

- How to automate the Qlik Cloud Monitoring Apps

- Other Qlik Cloud Monitoring Apps

- OEM Dashboard for Qlik Cloud

- Monitoring Apps for Qlik Sense Enterprise on Windows

- Operations Monitor and License Monitor

- App Metadata Analyzer

- The Monitoring & Administration Topic Group

- Other Apps

Qlik Cloud

Data Capacity Reporting App

The Data Capacity Reporting App is a Qlik Sense application built for Qlik Cloud, which helps you to monitor the capacity consumption for your license at both a consolidated and a detailed level. It is available for deployment via the administration activity center in a tenant with a capacity subscription.

The Data Capacity Reporting App is a fully supported app distributed within the product. For more information, see Qlik Help.

Access Evaluator for Qlik Cloud

The Access Evaluator is a Qlik Sense application built for Qlik Cloud, which helps you to analyze user roles, access, and permissions across a tenant.

The app provides:

- User and group access to spaces

- User, group, and share access to apps

- User roles and associated role permissions

- Group assignments to roles

For more information, see Qlik Cloud Access Evaluator.

Answers Analyzer for Qlik Cloud

The Answers Analyzer provides a comprehensive Qlik Sense dashboard to analyze Qlik Answers metadata across a Qlik Cloud tenant.

It provides the ability to:

- Track user questions across knowledgebases, assistants, and source documents

- Analyze user behavior to see what types of questions users are asking about what content

- Optimize knowledgebase sizes and increase answer accuracy by removing inaccurate, unused, and unreferenced documents

- Track and monitor page size to quota

- Ensure that data is kept up to date by monitoring knowledgebase index times

- Tie alerts into metrics (e.g. a knowledgebase hasn't been updated in over X days)

For more information, see Qlik Cloud Answers Analyzer.

App Analyzer for Qlik Cloud

The App Analyzer is a Qlik Sense application built for Qlik Cloud, which helps you to analyze and monitor Qlik Sense applications in your tenant.

The app provides:

- User sessions by app, sheets viewed

- Large App consumption monitoring

- App, Table and Field memory footprints

- Synthetic keys and island tables to help improve app development

- Threshold analysis for fields, tables, rows and more

- Reload times and peak RAM utilization by app

For more information, see Qlik Cloud App Analyzer.

Automation Analyzer for Qlik Cloud

The Automation Analyzer is a Qlik Sense application built for Qlik Cloud, which helps you to analyze and monitor Qlik Application Automation runs in your tenant.

Some of the benefits of this application are as follows:

- Track number of automations by type and by user

- Analyze concurrent automations

- Compare current month vs prior month runs

- Analyze failed runs - view all schedules and their statuses

- Tie in Qlik Alerting

For more information, see Qlik Cloud Automation Analyzer.

Entitlement Analyzer for Qlik Cloud

The Entitlement Analyzer is a Qlik Sense application built for Qlik Cloud, which provides Entitlement usage overview for your Qlik Cloud tenant for user-based subscriptions.

The app provides:

- Which users are accessing which apps

- Consumption of Professional, Analyzer and Analyzer Capacity entitlements

- Whether you have the correct entitlements assigned to each of your users

- Where your Analyzer Capacity entitlements are being consumed, and forecasted usage

For more information, see The Entitlement Analyzer.

Reload Analyzer for Qlik Cloud

The Reload Analyzer is a Qlik Sense application built for Qlik Cloud, which provides an overview of data refreshes for your Qlik Cloud tenant.

The app provides:

- The number of reloads by type (Scheduled, Hub, In App, API) and by user

- Data connections and used files of each app’s most recent reload

- Reload concurrency and peak reload RAM

- Reload tasks and their respective statuses

For more information, see Qlik Cloud Reload Analyzer.
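Two of the Reload Analyzer measures listed above can be illustrated offline: reload counts by type and user, and peak reload concurrency. The sketch below is not the app's load script. It assumes a hypothetical list of reload records with `type`, `user`, `start` and `end` fields and uses a simple sweep over start/end events to find the peak number of overlapping reloads.

```python
from collections import Counter
from typing import Iterable, Mapping

# Hypothetical record shape: {"type": "Scheduled", "user": "alice",
# "start": 0, "end": 10}. Field names are assumptions for illustration only.


def reloads_by_type_and_user(reloads: Iterable[Mapping]) -> tuple[Counter, Counter]:
    """Count reloads per reload type and per user."""
    by_type: Counter = Counter()
    by_user: Counter = Counter()
    for reload in reloads:
        by_type[reload["type"]] += 1
        by_user[reload["user"]] += 1
    return by_type, by_user


def peak_concurrency(reloads: Iterable[Mapping]) -> int:
    """Largest number of reloads running at the same moment (sweep line)."""
    events = []
    for reload in reloads:
        events.append((reload["start"], 1))   # a reload starts
        events.append((reload["end"], -1))    # a reload finishes
    # Sort by time; at equal timestamps -1 (finish) sorts before +1 (start),
    # so back-to-back reloads are not counted as overlapping.
    events.sort()
    running = peak = 0
    for _, delta in events:
        running += delta
        peak = max(peak, running)
    return peak
```

Processing finishes before starts at the same timestamp is a design choice. With the opposite tie-break, a reload that starts exactly when another ends would count as concurrent.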

Report Analyzer for Qlik Cloud

The Report Analyzer provides a comprehensive dashboard to analyze metered report metadata across a Qlik Cloud tenant.

The app provides:

- Current Month Reports Metric

- History of Reports Metric

- Breakdown of Reports Metric by App, Event, Executor (and time periods)

- Failed Reports

- Report Execution Duration

For more information, see Qlik Cloud Report Analyzer.

How to automate the Qlik Cloud Monitoring Apps

Do you want to automate the installation, upgrade, and management of your Qlik Cloud Monitoring apps? With the Qlik Cloud Monitoring Apps Workflow, made possible through Qlik's Application Automation, you can:

- Install/update the apps with a fully guided, click-through installer using an out-of-the-box Qlik Application Automation template.

- Programmatically rotate the API key that is required for the data connection on a schedule using an out-of-the-box Qlik Application Automation template. This ensures that the data connection is always operational.

- Get alerted whenever a new version of a monitoring app is available using Qlik Data Alerts.

For more information and usage instructions, see Qlik Cloud Monitoring Apps Workflow Guide.

Other Qlik Cloud Monitoring Apps

OEM Dashboard for Qlik Cloud

The OEM Dashboard is a Qlik Sense application for Qlik Cloud designed for OEM partners to centrally monitor usage data across their customers’ tenants. It provides a single pane to review numerous dimensions and measures, compare trends, and quickly spot issues across many different areas.

Although this dashboard is designed for OEMs, it can also be used by partners and customers who manage more than one tenant in Qlik Cloud.

For more information and to download the app and usage instructions, see Qlik Cloud OEM Dashboard & Console Settings Collector.

With the exception of the Data Capacity Reporting App, all Qlik Cloud monitoring applications are provided as-is and are not supported by Qlik. Over time, the APIs and metrics used by the apps may change, so it is advised to monitor each repository for updates and to update the apps promptly when new versions are available.

If you have issues while using these apps, support is provided on a best-efforts basis by contributors to the repositories on GitHub.

Monitoring Apps for Qlik Sense Enterprise on Windows

Operations Monitor and License Monitor

The Operations Monitor loads service logs to populate charts covering performance history of hardware utilization, active users, app sessions, results of reload tasks, and errors and warnings. It also tracks changes made in the QMC that affect the Operations Monitor.

The License Monitor loads service logs to populate charts and tables covering token allocation, usage of login and user passes, and errors and warnings.

For a more detailed description of the sheets and visualizations in both apps, visit the story About the License Monitor or About the Operations Monitor that is available from the app overview page, under Stories.

Basic information can be found here:

The License Monitor

The Operations Monitor

Both apps come pre-installed with Qlik Sense.

If a direct download is required: Sense License Monitor | Sense Operations Monitor. Note that Support can only be provided for Apps pre-installed with your latest version of Qlik Sense Enterprise on Windows.

App Metadata Analyzer

The App Metadata Analyzer app provides a dashboard to analyze Qlik Sense application metadata across your Qlik Sense Enterprise deployment. It gives you a holistic view of all your Qlik Sense apps, including granular level detail of an app's data model and its resource utilization.

Basic information can be found here:

App Metadata Analyzer (help.qlik.com)

For more details and best practices, see:

App Metadata Analyzer (Admin Playbook)

The app comes pre-installed with Qlik Sense.

The Monitoring & Administration Topic Group

Looking to discuss the Monitoring Applications? In this group, we share key versions of the Sense Monitor Apps and the latest QV Governance Dashboard, discuss best practices, and post video tutorials, and you can ask your questions.

Other Apps

LogAnalysis App: The Qlik Sense app for troubleshooting Qlik Sense Enterprise on Windows logs

Sessions Monitor, Reloads-Monitor, Log-Monitor

Connectors Log Analyzer

All Other Apps are provided as-is and no ongoing support will be provided by Qlik Support.


How to send an Excel report as an attachment using Qlik Application Automation

Environment

- Qlik Sense Enterprise SaaS

- Qlik Application Automation

This article describes how an Excel file can be sent as an attachment by using the Send Mail block in Qlik Application Automation.

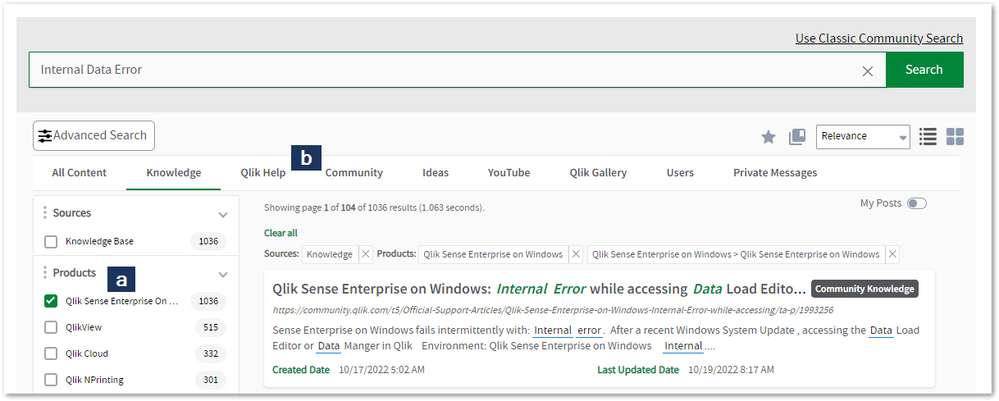

Automation Structure

The creation of an Excel report from a Qlik Sense straight table is explained in the first part of this article.

Let's walk through the blocks used in the automation workflow to send the Excel file as an attachment using the "Send Mail" block.

- Open the Excel file saved in Microsoft SharePoint using the "Open File on Microsoft SharePoint" block.

- Use the "Send Mail" block from Mail Connector to send the Excel file as an attachment via email.
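The two-block workflow above opens a file and mails it as an attachment. The sketch below shows the same idea outside Qlik Application Automation, using only Python's standard `email` and `smtplib` modules. It is a swapped-in technique for illustration, not what the Send Mail block does internally. The SMTP host, port and credentials are placeholders, and reading the workbook from SharePoint is out of scope; the bytes are passed in directly.

```python
import smtplib
from email.message import EmailMessage
from typing import Optional

# MIME type for .xlsx workbooks.
XLSX_TYPE = ("application", "vnd.openxmlformats-officedocument.spreadsheetml.sheet")


def build_report_email(sender: str, recipients: list[str], subject: str,
                       body: str, filename: str, xlsx_bytes: bytes) -> EmailMessage:
    """Create a plain-text email with one Excel workbook attached."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    msg.set_content(body)
    maintype, subtype = XLSX_TYPE
    # add_attachment turns the message into multipart/mixed and base64-encodes the bytes.
    msg.add_attachment(xlsx_bytes, maintype=maintype, subtype=subtype,
                       filename=filename)
    return msg


def send_report(msg: EmailMessage, host: str, port: int = 587,
                user: Optional[str] = None, password: Optional[str] = None) -> None:
    """Send via SMTP with STARTTLS (host, port and credentials are placeholders)."""
    with smtplib.SMTP(host, port) as smtp:
        smtp.starttls()
        if user is not None:
            smtp.login(user, password)
        smtp.send_message(msg)
```

Building the message separately from sending it lets you inspect or test the attachment without contacting a mail server.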

A JSON file containing the above automation is attached to this article.

Please follow the steps provided in the How to import automation from a JSON file article to import the automation.

Related Content


How to resolve Verification Error - x Qlik NPrinting webrenderer can reach Qlik ...

This article describes how to resolve the NPrinting connection verification error:

x Qlik NPrinting webrenderer can reach Qlik Sense hub error

Environment

- NPrinting: all versions

- Qlik Sense: all versions

- multi-node QS proxy environment

- NLB (Network Load Balancer or alias URL) server address used in front of a cluster of Qlik Sense proxy nodes, which are linked to a virtual proxy. Proxy nodes will not work with NPrinting unless they are linked to a Qlik Sense virtual proxy.

Resolution

- Although it is possible to use an NLB address in front of the Qlik Sense proxy nodes used by NPrinting, the NPrinting connection verification process will then report the error described in the article title.

- This error can safely be ignored as this does not affect generation of NPrinting connections

- To eliminate the 'error' message, update your NPrinting connection to use the virtual proxy node address (computer name) behind the NLB address. It must be the computer name, not an alias URL.

- The proxy node used must be linked to a virtual proxy with the following required attributes:

- Microsoft Windows NTLM authentication on the Qlik Sense proxy. (SAML and JWT are not supported. If your virtual proxy uses SAML or JWT authentication, you need to add a new virtual proxy with NTLM enabled for Qlik NPrinting connections.)

- A link between the proxy node and virtual proxy.

- For all NPrinting to Qlik Sense requirements visit https://help.qlik.com/en-US/nprinting/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm#anchor-1

Related Content

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/Troubleshooting/Verify-connection-to-Sense-errors.htm

- https://help.qlik.com/en-US/nprinting/Content/NPrinting/DeployingQVNprinting/NPrinting-with-Sense.htm#Requirem

Internal Investigation ID

- QB-2871


Qlik Cloud Analytics: Manual Reload results may not be displayed in the Schedule...

The reload history found in the Catalog (1) > App menu (2) > Schedule (3) > History (4) does not display results of manually initiated reloads.

Resolution

To review manual triggers:

- Go to the Catalog

- Click the App menu (...)

- Open Details

- Open Reload History

Improvements to this section of the tenant UI are planned for the future. No details or estimates can be provided as of now.

Cause

This is a current limitation. Only reloads triggered on a schedule will be displayed in this section of the tenant UI.

Environment

- Qlik Cloud Analytics


Qlik Data Gateway reloads fail when triggering on a schedule: The engine error c...

A scheduled reload using Qlik Data Gateway fails with the following error:

Internal error. (Connector error: Unknown reason:. Connector process started but gRPC server failed to initialize after 3 attempts (DirectAccess-1500)) The engine error code: EDC_ERROR:22013

Manual reloads may work as expected, but scheduled reloads continue to error out.

Resolution

The error indicates a resource shortage.

Increase the Qlik Data Gateway VM server CPU and RAM to meet the system requirements. See System prerequisites for details.

Environment

- Qlik Cloud Analytics

- Qlik Data Gateway


How to: Getting started with the Microsoft Outlook 365 connector in Qlik Applica...

This article helps you get started with the Microsoft Outlook 365 connector in Qlik Application Automation.

Authentication and Authorization

To authenticate with Microsoft Outlook 365, create a new connection. The connector uses OAuth2 for authentication and authorization. You will be prompted with a popup screen to consent to a list of permissions for Qlik Application Automation to use. The OAuth scopes that are requested are:

- Mail.Send

- User.Read

- offline_access

Sending Email with the Microsoft Outlook 365 connector

The scope of this connector is limited to sending emails. Sending email attachments is currently not supported, and we are looking to provide this functionality in the future. The suggested approach is to upload files to a different platform, e.g. OneDrive or Dropbox, and create a sharing link that can be included in the email body.

The following parameters are available on the Send Email block:

- To: A comma-separated list of recipients;

- CC: A comma-separated list of recipients to which the email should be copied;

- BCC: A comma-separated list of recipients to which the email should be blind copied;

- Subject: A title for your email;

- Type: Choose between plain text email or HTML;

- Body: The content of your email. You can use HTML for your email if chosen as the type.
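The Send Email parameters above map naturally onto a Microsoft Graph `sendMail` request body. The connector's Mail.Send scope suggests it uses Graph, but that is an assumption; this article does not confirm how the block builds its request. The sketch below shows one plausible mapping: the comma-separated To/CC/BCC strings become Graph recipient objects, and Type becomes `contentType`. It only builds the JSON and does not call Graph.

```python
import json
from typing import Any


def _recipients(addresses: str) -> list[dict[str, Any]]:
    """Turn 'a@x.com, b@y.com' into Graph-style recipient objects."""
    return [{"emailAddress": {"address": addr.strip()}}
            for addr in addresses.split(",") if addr.strip()]


def build_send_mail_payload(to: str, subject: str, body: str,
                            body_type: str = "Text", cc: str = "",
                            bcc: str = "") -> dict[str, Any]:
    """Map Send Email-style parameters onto a Graph sendMail request body (assumed mapping)."""
    if body_type not in ("Text", "HTML"):
        raise ValueError("body_type must be 'Text' or 'HTML'")
    return {
        "message": {
            "subject": subject,
            "body": {"contentType": body_type, "content": body},
            "toRecipients": _recipients(to),
            "ccRecipients": _recipients(cc),
            "bccRecipients": _recipients(bcc),
        }
    }


if __name__ == "__main__":
    payload = build_send_mail_payload(
        to="alice@example.com, bob@example.com",
        subject="Monthly report",
        body="<p>The report is available here: <a href='https://example.com/share'>link</a></p>",
        body_type="HTML",
    )
    print(json.dumps(payload, indent=2))
```

Because attachments are not supported, the example body carries a sharing link rather than a file, following the approach suggested above.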

Generating a report and sending an email with the report

As we do not currently support email attachments, we first need to generate a sharing link in OneDrive or an alternative file-sharing service. The following automation shows how to generate a report from a Qlik Sense app, upload the report to Microsoft OneDrive, create a sharing link, and send an email with the sharing link in the body. This automation is also attached to this post as a JSON file.

Environment:


Qlik Cloud: How to see if claims for 'USER' and 'GROUPS' are passing correctly f...

The claims can be accessed via an API endpoint:

For User claims:

Log out of the tenant and re-authenticate using the new identity provider connection. Once logged in, change the URL in the address bar to https://<tenanthostname>/api/v1/diagnose-claims. This returns the JSON of the claims information your IDP sent to the tenant. Here is a slightly redacted example:

Explanations of the different sections in the diagnose-claims endpoint:

- internalClaims: Summary of the user in Qlik Cloud. Usually derived from the IDP claims, but also contains information specific to Qlik Cloud access control (like the user’s roles).

- claimsFromIdp: The raw claims received from the IDP. For Azure AD, these most likely come directly from Azure AD’s ID token. However, groups from the ID token are not imported to Qlik Cloud; the Microsoft Graph API is used to retrieve them instead.

- extraClaims: At the time of writing, this section only applies to Azure AD, since groups are fetched from the Microsoft Graph API. If groups were retrieved correctly from the Microsoft Graph API, they will be shown here.

- mappedClaims: Applies the extra claims mapping on top of the raw IDP claims to get the final set of claims used for the Qlik Cloud user.

For Group Claims:

Once logged in, change the URL in the address bar to https://<tenanthostname>/api/v1/groups. This returns the JSON of the group information sent to the tenant.
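If you save the diagnose-claims response to a file, a short script can help you compare the four sections side by side. The sketch below builds the two URLs described above from a tenant hostname and lists the claim names in each section. It assumes each section is a JSON object. It also assumes that Graph-fetched groups appear under a `groups` key inside `extraClaims`; that key name is a guess, so check it against your own response. The script does not call the API.

```python
import json
from typing import Any

CLAIM_SECTIONS = ("internalClaims", "claimsFromIdp", "extraClaims", "mappedClaims")


def claim_urls(tenant_hostname: str) -> dict[str, str]:
    """Build the user and group diagnostic URLs for a tenant hostname."""
    base = f"https://{tenant_hostname}/api/v1"
    return {"user": f"{base}/diagnose-claims", "groups": f"{base}/groups"}


def summarize_claims(raw_json: str) -> dict[str, list[str]]:
    """List the claim names found in each section of a saved diagnose-claims response."""
    data: dict[str, Any] = json.loads(raw_json)
    summary = {}
    for section in CLAIM_SECTIONS:
        value = data.get(section)
        # Sections are assumed to be JSON objects; anything else is reported as empty.
        summary[section] = sorted(value) if isinstance(value, dict) else []
    return summary


def groups_from_graph(raw_json: str) -> list[Any]:
    """Return extraClaims['groups'] (key name is an assumption), or [] if absent."""
    extra = json.loads(raw_json).get("extraClaims")
    if not isinstance(extra, dict):
        return []
    return list(extra.get("groups", []))
```

An empty `groups_from_graph` result for an Azure AD user is a hint to check whether groups are being retrieved from Microsoft Graph, as described for extraClaims.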

Environment

The information in this article is provided as-is and is to be used at your own discretion. Depending on the tool(s) used, customization(s), and/or other factors, ongoing support on the solution below may not be provided by Qlik Support.


Qlik Stitch: How to submit feature request

Feature requests are submitted to Qlik through our Ideation program, which is accessible via the Qlik Ideation Portal and is available for registered Qlik customers.

What would be applicable as a feature request for Qlik Stitch?

- Certain fields you need are available through specific integrations, but are not currently supported by Stitch.

- You're looking for additional flexibility or functionality in Stitch that isn’t yet available.

If either of these applies to you, we’d love to hear from you!

For instructions on submitting an idea or proposing an improvement, see How To Submit an Idea or Propose and Improvement For Qlik Products.

Environment


Qlik Stitch: How to detect Potential Data Discrepancies?

If you notice certain fields not receiving data from a specific date onward, or if data replication unexpectedly stops for some fields, it may indicate a potential data discrepancy issue. However, before diving into investigation or troubleshooting, it's crucial to first confirm whether a discrepancy actually exists.

First checks

To confirm whether a discrepancy exists, you will need to check the following things.

- Confirm that these fields contain non-null values in your source data. Stitch only creates a column on your downstream table once at least one value is returned for that field. An empty column cannot be replicated to the destination because Stitch cannot determine its data type.

- When data is deemed incompatible by the destination, the record is “rejected” and logged in a table called _sdc_rejected. What looks like missing data may actually be a compatibility issue. If you’re missing data, the first place to look is the _sdc_rejected table in the integration’s schema. See the Stitch documentation (rejected-records-system-table) for more information on this table and how to use it for troubleshooting.

Stitch will create the _sdc_primary_keys even if none of the tables in the integration have a Primary Key. Primary Key data will be added to the table when and if a table is replicated that has a defined Primary Key. This means it’s possible to have an empty _sdc_primary_keys table.

- If the missing records were created very recently, or if Stitch is replicating a large historical data set, you may need to wait for an update of your data to be completed before they appear in your destination.
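The first two checks above can be scripted. The sketch below does two things. First, it finds fields that have no non-null value in a sample of source records; Stitch would not create a destination column for those. Second, it counts rejected records per table in `_sdc_rejected`. SQLite stands in for your destination here, and the `table_name` column is an assumption based on Stitch's rejected-records table. Confirm the exact columns in the Stitch documentation for your destination before adapting the query.

```python
import sqlite3
from typing import Any, Iterable, Mapping


def all_null_fields(records: Iterable[Mapping[str, Any]], fields: list[str]) -> list[str]:
    """Fields with no non-null value in any source record.

    Stitch will not create a destination column for these.
    """
    populated = set()
    for record in records:
        for field in fields:
            if record.get(field) is not None:
                populated.add(field)
    return [field for field in fields if field not in populated]


def rejected_counts(conn: sqlite3.Connection) -> dict[str, int]:
    """Count rows in _sdc_rejected per source table.

    The table_name column is an assumption; check the Stitch rejected-records
    documentation for the columns your destination actually has.
    """
    rows = conn.execute(
        "SELECT table_name, COUNT(*) FROM _sdc_rejected "
        "GROUP BY table_name ORDER BY table_name"
    ).fetchall()
    return {table: count for table, count in rows}
```

A field returned by `all_null_fields` explains a missing column. A non-zero count in `rejected_counts` points to a compatibility issue rather than lost data.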

If none of the above situations applies, contact Support for further investigation or troubleshooting.

Related Content

To follow the standard procedure for data discrepancy issues, please provide the relevant information described in our document:

data-discrepancy-troubleshooting-guide

Environment


Qlik NPrinting Engine Offline status due to RabbitMQ corruption

The status of the Qlik NPrinting Engine consistently appears as Offline on the Engine Manager page.

The Qlik NPrinting Engine Logs read:

RabbitMq server is not connected - trying diagnoser queue creation again in 5 seconds

Qlik.NPrinting.Engine 24.4.14.0 Qlik.NPrinting.Engine.EngineService 20250521T190411.917+05:30

ERROR NBHI-DC-APP-69 RabbitMq service is not connected - trying again in 60 seconds.

ERROR: RabbitMQ.Client.Exceptions.BrokerUnreachableException: None of the specified endpoints were reachable

---> RabbitMQ.Client.Exceptions.OperationInterruptedException: The AMQP operation was interrupted:

AMQP close-reason, initiated by Peer, code=541, text='INTERNAL_ERROR - access to vhost '/' refused for user 'client_engine':

vhost '/' is down', classId=10, methodId=40

Additionally, the RabbitMQ logs may read:

EXTERNAL login refused: user 'client_engine' - invalid credentials

[info] closing AMQP connection ([::1]:60918 -> [::1]:5672)

[info] accepting AMQP connection ([::1]:60921 -> [::1]:5672)

[error] Error on AMQP connection ([::1]:60921 -> [::1]:5672, state: starting):

[error] EXTERNAL login refused: user 'client_engine' - invalid credentials

[info] closing AMQP connection ([::1]:60921 -> [::1]:5672)

For information about how to obtain log files, see How to collect the Qlik NPrinting Platform Log Files.

Resolution

Initial checks:

- Follow the instructions in Qlik NPrinting Engine Offline status

- Continue with How to Resolve Qlik NPrinting Engine Offline Status.

If the issue persists, the RabbitMQ installation needs to be repaired. Doing so effectively may require several rounds of recreating the RabbitMQ certificates and reinstalling the Qlik NPrinting Engine.

Our expected result is:

- The Qlik NPrinting Engine comes online

- RabbitMQ logs do not include errors

- We do not receive errors when checking the RabbitMQ status

RabbitMQ repair:

All Qlik NPrinting services must be running for this procedure to work.

- Recreate the RabbitMQ certificates (see Recreate the messaging service certificates).

- Reinstall the Qlik NPrinting engine (see Installing Qlik NPrinting Engine).

- Does the issue persist? Review the Qlik NPrinting and RabbitMQ logs to verify if the two errors (RabbitMq service is not connected and EXTERNAL login refused: user 'client_engine' - invalid credentials) continue to be logged.

- Check the RabbitMQ service status (see Check the node status on the RabbitMQ console).

- Repeat: Recreate the RabbitMQ certificates (see Recreate the messaging service certificates).

- Repeat: Reinstall the Qlik NPrinting engine (see Installing Qlik NPrinting Engine).

- Verify RabbitMQ permissions for the client_engine user (see Check the messaging service users and permissions).

- Repeat: Check the RabbitMQ service status (see Check the node status on the RabbitMQ console).

- If you continue to see errors when checking the RabbitMQ status (rabbitmqctl.bat status), repeat the procedure from the first step (recreating the RabbitMQ certificates).

Complete this cycle no more than twice. Repeating it may be necessary to re-establish the connection between the Qlik NPrinting Engine and RabbitMQ.
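The log review and status check in the cycle above can be scripted so that each round is checked the same way. The following Python sketch is an illustration only, not a Qlik tool. It assumes you have already collected the logs as text files, and that you pass in the full rabbitmqctl.bat command for your installation (the path is a placeholder; run it in the environment described in Check the node status on the RabbitMQ console). It also assumes rabbitmqctl exits with a non-zero code when the node is not healthy.

```python
"""Sketch only: count the two RabbitMQ errors quoted in this article and check
the exit code of a RabbitMQ status command.

Assumptions: log files are text files you have collected; the rabbitmqctl.bat
path and environment are specific to your installation and supplied by you.
"""
import subprocess
from pathlib import Path

# Error strings quoted in this article.
NOT_CONNECTED = "RabbitMq service is not connected"
LOGIN_REFUSED = "EXTERNAL login refused: user 'client_engine' - invalid credentials"
ERROR_PATTERNS = (NOT_CONNECTED, LOGIN_REFUSED)


def count_errors(lines):
    """Return {pattern: number of lines containing it} for each known error."""
    counts = {pattern: 0 for pattern in ERROR_PATTERNS}
    for line in lines:
        for pattern in ERROR_PATTERNS:
            if pattern in line:
                counts[pattern] += 1
    return counts


def scan_log_files(paths):
    """Sum the error counts over several log files (undecodable bytes are replaced)."""
    totals = {pattern: 0 for pattern in ERROR_PATTERNS}
    for path in paths:
        with Path(path).open(encoding="utf-8", errors="replace") as handle:
            for pattern, n in count_errors(handle).items():
                totals[pattern] += n
    return totals


def status_command_ok(command):
    """Run a status command given as a list of arguments; True if it exits with code 0."""
    result = subprocess.run(command, capture_output=True, text=True)
    return result.returncode == 0
```

For example, `status_command_ok([r"C:\path\to\rabbitmqctl.bat", "status"])`, where the path is a hypothetical placeholder. Zero counts in fresh logs plus a clean exit from the status command correspond to two of the expected results listed earlier; the Engine still has to be confirmed online separately.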

If this fails to resolve the issue, log a support case with Qlik Support, including detailed information about all troubleshooting steps that have already been performed.

Cause

This indicates that the RabbitMQ installation is corrupted or otherwise damaged. Possible root causes include:

- A Qlik NPrinting upgrade corrupted the files

- The C:\ drive, or the drive Qlik NPrinting is installed on, was full

- RabbitMQ crashed due to antivirus scanning

- Unsupported items: for Qlik Sense, see Qlik NPrinting and Qlik Sense Object Support and Limitations; for QlikView, see Qlik NPrinting: Unsupported QlikView Document items, System Configurations and other limitations.

Environment

- Qlik NPrinting

-

Qlik Cloud Analytics Basic Users are automatically promoted to Full User entitle...

Users previously assigned a Basic User entitlement have unexpectedly been promoted to Full User entitlement.

This is working as expected and will happen when a Basic User is granted any additional permissions. Source: Types of user entitlements.

An exception is the Collaboration Platform User role; see Qlik Cloud Analytics: Assigning the Collaboration Platform User role to a Basic User does not promote it to full.

How to determine what elevates a user

To determine what promotes a user to a Full User:

- Log in and go to the Cloud Administration center

- Go to User Management

- Hover over the user's Information icon

You can also use the Access Evaluator App for a more holistic overview of assignments.

What to remove

To prevent users from being promoted to Full Users, remove any additional assignments, both in individual spaces and at the global level.

Spaces

Verify that the users are not assigned any roles other than Has restricted view.

Be careful when using the Anyone group, since assigning any other role to this group will promote all users to Full.

This includes the default data space, Default_Data_Space, which is created during tenant setup. To remove the Anyone member:

- Go to Spaces

- Click the ellipsis menu (...) and choose Manage members

- Remove Anyone through the ellipsis menu (...) by clicking Remove

Global

Turn off Auto assign for all Security roles in the Administration Center.

- Open the Administration Center

- Open Users

- Switch to the Permissions tab

- Switch Auto assign to Off on all Security roles.

Specific users

If a user was manually assigned a role, the role has to be removed again manually.

- Open the Administration Center

- Open Users

- Switch to the All Users tab if it is not already selected

- Locate the user, click the ... menu, choose Edit roles, and remove all previously assigned roles
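If you audit many users, the rules in this article can be expressed as a small check. This is a hypothetical Python sketch, not a Qlik API: it assumes you have listed each user's space roles (including roles granted through the Anyone group) and security roles yourself, and it encodes only the rules stated here. Any space role other than Has restricted view, and any security role other than Collaboration Platform User, is flagged as promoting the user.

```python
"""Hypothetical audit helper applying this article's promotion rules to role
assignments you have listed yourself. It calls no Qlik API."""
from dataclasses import dataclass, field

# Space role that does not promote a Basic User, per this article.
NON_PROMOTING_SPACE_ROLE = "Has restricted view"
# Security role documented as not promoting a Basic User.
NON_PROMOTING_SECURITY_ROLES = {"Collaboration Platform User"}


@dataclass
class UserAssignments:
    name: str
    # Space name -> roles granted in that space (directly or through Anyone).
    space_roles: dict = field(default_factory=dict)
    # Security roles assigned manually or through Auto assign.
    security_roles: set = field(default_factory=set)


def promoting_assignments(user):
    """Return a sorted list of assignments expected to promote the user to Full User."""
    findings = []
    for space, roles in user.space_roles.items():
        for role in roles:
            if role != NON_PROMOTING_SPACE_ROLE:
                findings.append(f"space '{space}': {role}")
    for role in user.security_roles:
        if role not in NON_PROMOTING_SECURITY_ROLES:
            findings.append(f"security role: {role}")
    return sorted(findings)
```

An empty result means none of the listed assignments should promote the user; the Information icon and the Access Evaluator App remain the authoritative sources.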

Environment

- Qlik Cloud Analytics

-

Qlik Data Gateway: CSV or Parquet File? Which one is faster?

Is it faster to use a CSV file versus a Parquet file? Will one load to Qlik Cloud faster than the other?

The answer to this depends on the data.

The Analytics Engine makes calls to read data from the file as needed (streaming). Parquet files have built-in compression, which can affect how quickly certain data can be accessed, while an equivalent CSV file may be larger in raw data volume.

Environment

- Qlik Cloud

- Qlik Data Gateway

-

Qlik Cloud Analytics Non-sql CREATE NONCLUSTERED INDEX fails

The following error occurred in Qlik Cloud Analytics:

Unexpected token: 'NONCLUSTERED', expected: 'Relationship' The engine error code: EDC_ERROR:11005 The error occurred here: CREATE >>>>>>NONCLUSTERED<<<<<< INDEX IX_WebProducts_ProductID ON #WebProducts(ProductID) !EXECUTE_NON_SELECT_QUERY

Resolution

To resolve the issue, prefix the statement with the SQL keyword so that it is sent to the database through the data connection, for example:

SQL CREATE NONCLUSTERED INDEX IX_YourIndexName1234 ON "test_db".SalesLT.Customer (LastName DESC) !EXECUTE_NON_SELECT_QUERY;

Environment

- Qlik Cloud Analytics

-

Qlik Cloud Analytics: show image from app's media library inside a visualization

Some Qlik Cloud visualisations allow images to be shown from external URLs.

Is it possible to embed images that were uploaded to the media library?

Resolution

We currently don't have a global repository for images in Qlik Cloud, but each app has its own media library, where images can be uploaded.

To link them:

- Make sure you are authenticated on the tenant

- Get the list of all media contained in an app by going to https://TENANT.REGION.qlikcloud.com/api/v1/apps/APPID/media/list, replacing TENANT, REGION, and APPID with the correct values

- Get the link for each image

Example:

"type": "image","id": "UserAnalyzer.png","link": "/api/v1/apps/1aa5c924-72cb-405a-bb60-8b5118e4b299/media/files/UserAnalyzer.png","name": "UserAnalyzer.png"

- Use the resulting /api/v1... URL for your visualization

Using a full URL to display the images (example: https://TENANT.REGION.qlikcloud.com/api/v1/apps/APPID/media/files/FILENAME.png) will work inside the app when authenticated on the tenant, but it will not work in other scenarios, such as mashups or the reporting service.

Make sure to use a relative URL (example: /api/v1/apps/APPID/media/files/FILENAME.png) instead.

For suggestions on how to improve the media library, please log an idea at Qlik Ideation.
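Collecting the links and keeping them relative can be scripted. The following Python sketch assumes you have already fetched https://TENANT.REGION.qlikcloud.com/api/v1/apps/APPID/media/list while authenticated and have the response body as text. The exact top-level shape of that response is not documented in this article, so the sketch searches the whole JSON document for entries shaped like the example above ("type": "image" with a "link" field). The function names are hypothetical.

```python
"""Sketch: collect image links from a media list response and turn full media
URLs into the relative form recommended in this article.

Assumption: the response body was fetched while authenticated on the tenant.
"""
import json
from urllib.parse import urlsplit, urlunsplit


def image_links(response_text):
    """Return {name: link} for every entry with "type": "image" and a "link"."""
    found = {}
    stack = [json.loads(response_text)]
    while stack:
        node = stack.pop()
        if isinstance(node, dict):
            if node.get("type") == "image" and "link" in node:
                found[node.get("name", node.get("id"))] = node["link"]
            stack.extend(node.values())
        elif isinstance(node, list):
            stack.extend(node)
    return found


def to_relative_media_url(url):
    """Drop scheme and host from an app media file URL, keeping path and query."""
    parts = urlsplit(url)
    if "/media/files/" not in parts.path:
        raise ValueError(f"not an app media file URL: {url}")
    return urlunsplit(("", "", parts.path, parts.query, ""))
```

The "link" values in the media list are already relative, so `image_links` output can be used as-is; `to_relative_media_url` is only needed when you start from a full tenant URL.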

Environment

- Qlik Cloud Analytics

-

Release Notes Qlik Sense PostgreSQL installer version 1.2.0 to 2.0.0

The following release notes cover the Qlik PostgreSQL installer (QPI) version 1.2.0 to 2.0.0.

Content

- What's New

- 2.0.0 May 2025 Release Notes

- Known Limitations (2.0.0)

- 1.4.0 December 2023 Release Notes

- Known Limitations (1.4.0)

- 1.3.0 May 2023 Release Notes

- Known Limitations (1.3.0)

- 1.2.0 Release Notes

What's New

- The PostgreSQL version used by QPI has been updated to 14.17

- An upgrade of an already upgraded external instance is now possible

- Support for an upgrade of the embedded 14.8 database was added

- Silent installs and upgrades are supported in Qlik PostgreSQL Installer 1.4.0 and later. QPI can now be used with silent commands to install or upgrade the PostgreSQL database. (SHEND-973)

2.0.0 May 2025 Release Notes

SHEND-2273: Upgrade of Qlik Sense embedded PostgreSQL v 9.6, v 12.5, and v 14.8 databases to v 14.17. The PostgreSQL database is decoupled from Qlik Sense to become a standalone PostgreSQL database with its own installer and can be upgraded independently of the installed Qlik Sense version.

- Upgrade of already decoupled standalone PostgreSQL databases versions 9.6, 12.5, and 14.8 to version 14.17.

QCB-28706: Upgraded PostgreSQL version to 14.17 to address the pg_dump vulnerability (CVE-2024-7348).

SUPPORT-335: Upgraded PostgreSQL version to 14.17 to address the libcurl vulnerability (CVE-2024-7264).

QB-24990: Fixed an issue with upgrades of PostgreSQL if Qlik Sense was installed in a custom directory, such as D:\Sense.

Known Limitations (2.0.0)

- Rollback is not supported.

- The database size is not checked against free disk space before a backup is taken.

- Windows Server 2012 R2 does not support PostgreSQL 14.8. QPI cannot be used on Windows Server 2012 R2.

- QPI only upgrades a PostgreSQL instance that contains nothing but Qlik Sense databases (QSR, SenseServices, QSMQ, Licenses, QLogs, etc.)
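Because QPI does not compare the database size with free disk space before taking a backup, you may want to check this yourself beforehand. The Python sketch below is an assumption-laden illustration, not part of QPI: you supply the folder on the drive where the backup will be written and the database sizes in bytes (for example from PostgreSQL's pg_database_size() function), and the 1.2 headroom factor is an arbitrary illustrative margin rather than a Qlik recommendation.

```python
"""Sketch: compare free disk space with the total size of the Qlik Sense
databases before running QPI.

Assumptions: backup_dir and the database sizes are supplied by you; the
headroom factor is illustrative only.
"""
import shutil


def has_room_for_backup(backup_dir, database_sizes, headroom=1.2):
    """Return True if backup_dir's drive has at least sum(sizes) * headroom bytes free."""
    if any(size < 0 for size in database_sizes.values()):
        raise ValueError("database sizes must be non-negative")
    needed = sum(database_sizes.values()) * headroom
    free = shutil.disk_usage(backup_dir).free
    return free >= needed
```

For example, `has_room_for_backup(r"D:\\", {"QSR": 2_500_000_000, "SenseServices": 50_000_000})`, where the drive and sizes are placeholders for your own values.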

1.4.0 December 2023 Release Notes

SHEND-1359, QB-15164: Add support for encoding special characters for the Postgres password in QPI. If the superuser password contained certain special characters, QPI did not allow upgrading PostgreSQL using this password. The workaround was to set a different password, use QPI to upgrade the PostgreSQL database, and then reset the password after the upgrade. This workaround is no longer required with QPI 1.4.0, which supports encoded passwords.

SHEND-1408: Qlik Sense services were not started again by QPI after the upgrade. QPI failed to restart Qlik services after upgrading the PostgreSQL database. This has now been fixed.

SHEND-1511: Upgrade not working from 9.6 database. In QPI 1.3.0, upgrading from PostgreSQL 9.6 to 14.8 failed. This is fixed in QPI 1.4.0.

QB-21082: Upgrade from May 23 Patch 3 to August 23 RC3 fails when QPI is used before attempting the upgrade.

QB-20581: May 2023 installer breaks QRS if QPI was used with a patch before. Using QPI on a patched Qlik Sense version caused issues in the earlier version. This is now supported.

Known Limitations (1.4.0)

- QB-24990: Cannot upgrade PostgreSQL if Qlik Sense was installed in a custom directory such as D:\Sense. See Qlik PostgreSQL Installer (QPI): No supported existing Qlik Sense PostgreSQL database found.

- Rollback is not supported.

- Database size is not checked against free disk space before a backup is taken.

- QPI can only upgrade a bundled PostgreSQL database listening on the default port 4432. Using QPI to upgrade a standalone database or a database previously unbundled with QPI is not supported.

- A 14.8 embedded database cannot be migrated to a standalone database.

- Windows Server 2012 R2 does not support PostgreSQL 14.8. QPI cannot be used on Windows Server 2012 R2.

1.3.0 May 2023 Release Notes

-

How to Share Bookmarks in Qlik Sense

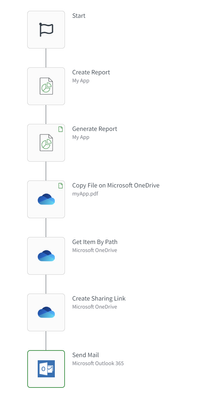

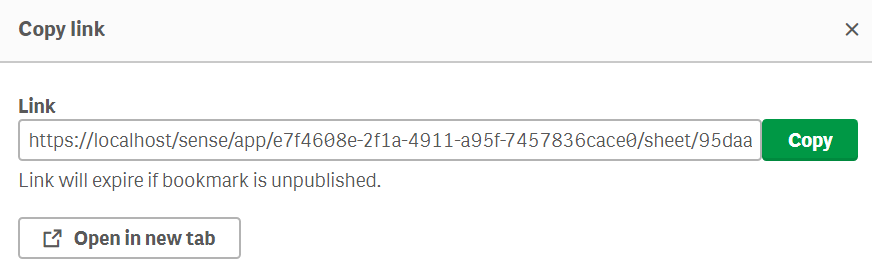

Create the Bookmark

- Open the bookmark menu, located in the top right corner of the Qlik Sense app overview.

- Create and save the bookmark

- Navigate back to the bookmark menu.

- Right-click the bookmark that you wish to share.

- Select Publish

You can also click Copy link. This shows a warning that the bookmark needs to be published; when you confirm the warning, the bookmark is published automatically.

Share the Bookmark

- Right-click the bookmark again.

- Select Copy link

- Share the link with anyone who has access to the application.

If the share option is not available, or if users who should see the bookmarks cannot find them, verify that Qlik Sense has not been set up with security rules that disallow sharing or access to specific objects. See the attached document for details.


-

Qlik Data Gateway: Unable to load MS Access (.accdb) data via Data Manager

Loading MS Access data (.accdb file) using generic ODBC (via Data Gateway) in Data Manager fails with the following error:

Failed to add data

Data could not be added to Data manager. Please verify that all data sources connected to the app are working and try adding the data again.

The Direct Access ODBC Connector log reads:

Message=Error in HandleJsonRequest for method=getRawScript,

exception=Exception, error=ODBC Wrapper: Unable to execute SQLForeignKeys:

[Microsoft][ODBC Driver Manager] Driver does not support this function.

internalError=True

Resolution

Use the Data Load Editor to load MS Access data.

Loading file-based Access databases through ODBC into a modern BI tool is not recommended, especially for larger files, which are likely to underperform during loading.

Cause

This error indicates that the MS Access ODBC driver does not support the SQLForeignKeys ODBC function. Retrieving the table foreign keys is, however, essential for Data Manager (unlike the Data Load Editor).

Internal Investigation ID(s)

SUPPORT-4446

Environment

- Qlik Cloud Analytics

- Qlik Data Gateway

-

Talend Spark Stream Kerberos enabled Job not working with error IllegalStateExce...

A Talend Spark Stream Job configured with YARN cluster mode and Kerberos enabled fails to execute with the following errors:

YarnClusterScheduler- Lost executor 3 on me-worker1.xxx.co.id: Unable to create executor due to Unable to register with external shuffle server due to : java.lang.IllegalStateException: Expected SaslMessage, received something else (maybe your client does not have SASL enabled?)

jaas.conf content:

Client {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/tmp/adm.keytab"
    principal="cld_adm@XXX.CO.ID"
    doNotPrompt=true;
};

KafkaClient {
    com.sun.security.auth.module.Krb5LoginModule required
    useKeyTab=true
    keyTab="/tmp/adm.keytab"
    principal="cld_adm@XXX.CO.ID"
    doNotPrompt=true;
};

Cause

The YARN external shuffle service is configured to require SASL authentication, but the Spark executor is either not configured to use SASL or is sending an incompatible message.

Common causes include:

- Missing or incorrect SASL configuration in either spark-defaults.conf or YARN's configuration file (yarn-site.xml).

- A version mismatch between Spark and YARN, where the shuffle service requires a particular SASL protocol that the Spark client does not support.

- Incorrect security configuration, such as missing Kerberos credentials or incorrect settings for spark.authenticate and related properties.

This error results in the executor's failure to register with the shuffle service, prompting the YarnClusterScheduler to mark it as lost.
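The first common cause can be checked mechanically. The Python sketch below is an illustration, not part of Talend or Spark: it parses text in the key/value format of spark-defaults.conf and reports whether spark.authenticate and spark.shuffle.service.enabled are set to true. Treating spark.shuffle.service.enabled (the standard Spark property for the external shuffle service) as required is an assumption based on the resolution below. It inspects only the Spark side; yarn-site.xml must be checked separately, and if your Talend Job passes Spark properties in its own configuration instead of spark-defaults.conf, check those values instead.

```python
"""Sketch: report Spark settings this article relies on that are missing or
not set to the expected value in spark-defaults.conf style text."""
import re

# spark.shuffle.service.enabled being required is an assumption (see lead-in).
REQUIRED = {
    "spark.authenticate": "true",
    "spark.shuffle.service.enabled": "true",
}


def parse_spark_defaults(text):
    """Parse 'key value' (or key=value / key:value) lines; '#' lines are comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first run of whitespace, '=' or ':' after the key.
        parts = re.split(r"[\s=:]+", line, maxsplit=1)
        props[parts[0]] = parts[1].strip() if len(parts) > 1 else ""
    return props


def missing_settings(text, required=REQUIRED):
    """Return {key: (expected, actual_or_None)} for settings absent or different."""
    props = parse_spark_defaults(text)
    problems = {}
    for key, expected in required.items():
        actual = props.get(key)
        if actual is None or actual.lower() != expected:
            problems[key] = (expected, actual)
    return problems
```

An empty result only means the Spark-side values match; a Spark/YARN version mismatch or missing Kerberos credentials would not be detected by this check.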

Resolution

Ensure that the external shuffle service is enabled and that the SASL settings match YARN's configuration:

spark.authenticate true

Optional (not necessary):

spark.network.crypto.enabled true
spark.network.crypto.saslFallback true

Environment

-

The new Straight Table and old Table (retired) in Qlik Analytics

The new Straight Table was moved from the visualization bundle into the new native section of charts. The new Straight Table offers many improvements over the old table, and we encourage everyone to start using the new table instead of the old one.

This article aims to answer any frequently asked questions around this switch, beginning with:

- The old table will not be removed anytime soon. It will continue to work until further notice. We encourage you to subscribe to the Qlik Support Blog and What's New in Qlik Cloud to stay ahead of updates.

- The old table will not be updated further. Use the new table to benefit from future improvements.

- We monitor your feedback and will improve the functionality of the new table.

FAQ

What improvements does the Straight table come with?

Here are some examples:

- Grid styling

- Totals styling

- Pagination or virtual scroll

- Scrollbars outside of the table

- Add or delete many fields at the same time

- Cell font family, size and color styling

- Font styling by expression

- Column width options (pixels, percentages)

- Chart exploration: end users can pick dimensions and measures

- Header on/off

- Zebra striping

- Null value styling

- Selection info in export

- Totals in export

- Titles in export

- Cyclic dimension controls in dropdown

Will the old table be removed?

No, the old table will continue to work for the foreseeable future. Any changes will be announced in advance. Regardless, we highly recommend upgrading your tables as soon as possible to benefit from the new Straight Table's functionality.

How do I convert to the new table?

The easiest way to convert your tables is to drop the new table chart onto the old one.

- First, copy the old table

- Edit the sheet that includes the Table (retired)

- Open the Charts pane

- Drag the Straight table over your table

- Choose Convert to: Straight table

The new table looks different and offers more functionality that must be enabled to be used.

When will the old table be deprecated?

Not anytime soon; it will be a gradual phase-out. The new Straight Table will be the preferred choice for all new applications, and we anticipate many will upgrade to benefit from the new functionality. Over time, usage of the old table will diminish until most applications are using the new table.

Will this affect Qlik Sense Enterprise on Windows?

Qlik Sense Enterprise on Windows will have the table feature aligned with Qlik Cloud Analytics in a future release.

I’m not happy with X and Y of the new table, will that be changed?

We’re closely monitoring feedback on the new table and are dedicated to creating the best possible experience, including improvements to accessibility. We are committed to accessibility standards so that people with disabilities can use our products. As for printing, the tables should print as consistently as possible. As for usability, we aim to enable experienced users to reach functionality quickly without overwhelming new users.

Environment

- Qlik Analytics

-

Partner Guide: How to Prepare and Collaborate with Qlik Data Analytics Technical...

This article helps you collaborate with Qlik Support as effectively as possible. It defines your responsibilities as a partner, including reproducing issues, performing basic troubleshooting, and consulting the knowledge base or official documentation.

For the Qlik Talend guide, see Partner Guide: How to Prepare and Collaborate with Qlik Talend Technical Support.

Before contacting Qlik Data Analytics Technical Support, partners must complete the steps outlined in Qlik Responsible Partner Duties and should review the OEM/MSP Support Policy to understand the scope of support and what is expected of partners.

- Partners are required to complete the steps outlined in this article before submitting a support case.

- It is the partner’s responsibility to answer the customer's questions to the best of their ability by referring to documentation, the Qlik Community, previous cases, and online resources before submitting a case or commenting on an existing one.

- For high-priority or urgent issues, it is acceptable to raise a ticket early. However, the responsibilities outlined in the article still apply at every stage of the case.

Content

- General Expectations Before Submitting a Case

- Isolate What Is Affected

- Provide Product and Version Information

- Reproduce the Issue in Your Environment

- Always Provide Complete (Not Partial) Logs

- Describe What You Have Already Investigated

- Submit All Related Files You Investigated Or Used For Replication

- Submit the Case Using Your Partner Account

- Partner Case Submission

- Template

- Summary of the Problem

- Customer Information

- Files Attached

- Summary of your Investigation

General Expectations Before Submitting a Case

Isolate What Is Affected

Identify which Qlik product, environment, product configuration, or system layer is experiencing the issue.

For example, if a task fails in Qlik Sense Enterprise on Windows, try running it directly in Qlik Sense Management Console to determine whether the problem is transient. Also, try running a different task to determine whether the problem is task-specific.

Similarly, if a reload fails in one environment (e.g., Production), ask the customer to try running it in another (e.g., Test) to confirm whether the issue is environment-specific.

Provide Product and Version Information

Always include the exact product name, version, and patch (or SR) the customer is using.

Many issues are version-specific, and Support cannot accurately investigate the issue without this information.

If the product the customer is using has reached End of Life (EOL), please plan the upgrade. If the issue is reproducible on the latest version, please reach out to us so that we can investigate and determine whether it is a defect or working as designed.

For End of Life or End of Support information, see Product Lifecycle.

Reproduce the Issue in Your Environment

Partners are expected to recreate the customer’s environment (matching versions, configurations, and other relevant details) and attempt to reproduce the issue.

If you do not already have a test environment, please ensure one is set up. Having your own environment is essential for reproducing issues and confirming whether the same behavior occurs outside of the customer’s setup.

In addition, please test whether the issue occurs in the latest supported product version. In some cases, it may also be helpful to test in a clean environment to rule out local configuration issues. If the issue does not occur in the newer version or a clean setup, it may have already been resolved, and you can propose an upgrade as a solution.

See the Release Notes for resolved issues.

Regardless of whether the issue could be reproduced, please include:

- The exact Qlik Product version and environment information (OS, Browser, etc)

- The exact steps you followed

- Screenshots showing your process

- Any sample apps, data files, or configuration files used during testing

- A summary of your results (such as “Reproduced same error,” or “Could not reproduce – no error occurred”)

Always Provide Complete (Not Partial) Logs

While pasting a portion of the log into the case comment can help highlight the main error, it is still required to attach the entire original log file (using, for example, FileCloud).

Support needs the full logs to understand the broader context and to confirm that the partial information is accurate and complete.

Without full logs, it is difficult to verify the root cause or provide reliable guidance.

Additionally:

- Specify which logs you reviewed

- Confirm that the customer provided full, uncut logs, not only short fragments

- Include logs from both the customer's environment and your own reproduction tests

- If possible, temporarily change the log level to DEBUG and share the resulting logs with us

Providing both sets of logs allows Support to see what testing has been done and whether the same error occurred during reproduction.

Describe What You Have Already Investigated

Please do not simply forward or copy and paste the customer’s inquiry.

As a responsible partner, you are expected to perform an initial investigation. In your case submission, clearly describe:

- What you reviewed (such as logs and configurations)

- What actions you took to isolate or reproduce the issue

- What you analyzed, tested, or attempted

- What you concluded or suspected based on your investigation (such as why you think it is a bug, why you think the problem is occurring)

Sharing this thought process:

- Helps Support understand your assumptions

- Avoids duplicated effort

- Demonstrates that the case is ready for advanced-level investigation

Even if the issue remains unresolved, outlining what you already tried helps Support move forward faster and more effectively.

Submit All Related Files You Investigated Or Used For Replication

Attach all relevant files you received from the customer and personally reviewed during your investigation, as well as all relevant files you have used when reproducing the steps.

Providing both the customer’s files and your reproduction files allows Support to verify whether the same issue occurred under the same conditions, and to determine if the problem is reproducible, environment-specific, or isolated to the customer's configuration.

This includes (but is not limited to):

- Log files (e.g., Qlik logs, Connector logs, Windows Event logs, Server Stats, HAR file, etc., if necessary)

- Screenshots: If an error is visible on the UI, please include the error message and accessed page URL.

- Sample input files or test data

Submit the Case Using Your Partner Account

All support cases must be submitted using your official partner account, not the customer's account.

If you do not yet have a partner account, contact Qlik Customer Support to request access and receive the appropriate onboarding.

Partner Case Submission

Review the support policy and set the case severity properly. See Qlik Support Policy and SLAs.

Template

This template helps guide you on what to include and how to structure your case.

Summary of the Problem

What happened? When did it happen? Where did it happen?

Clearly describe the event, including:

- A brief summary of the impact (e.g., "Unable to log in to Qlik Sense Enterprise on Windows hub")

- Date and time the issue occurred (e.g., "Since July 10, between 13:50–13:55 JST")

- Product (e.g., "Qlik Sense on Windows Nov 2024 Patch 7")

- Environment: OS version, Browser, etc

Customer Information

Only include what is needed based on the case type.

Examples:

- Customer's Company Name

- License number

- Tenant information (only Qlik Cloud):

Find your Qlik Cloud Subscription ID and Tenant Hostname and ID

Files Attached

List the files you have included in the case and what each one is.

Summary of your Investigation

Explain what investigation was done before contacting support.

Examples:

- Logs/files you reviewed

- Patterns/errors observed

- Assumptions you made (e.g., suspected cause)

- Whether the issue is reproducible (If it's reproducible, describe the reproduction steps too)

- Troubleshooting steps attempted

Thank you! We appreciate your cooperation in following these guidelines.

This ensures that your cases can be handled efficiently and escalated quickly when necessary.